MapLibre Architecture


This was forked from the mapbox-gl-native wiki commit 1640f7f (Apr 27, 2020).

maplibre-gl-native was forked from commit d60fd30 (Apr 30, 2020).

The documentation is also valid for maplibre-gl-native. Most of it is also valid for maplibre-gl-js.

Contributing

Issues are disabled in this repo. If you want to contribute, create a PR directly.

License

Up until commit 1640f7f:

console.log([][[]])

Changes after 1640f7f:

MIT

Mapbox GL Collision Detection

This document is meant to capture the overall architecture of symbol collision detection at the end of 2017, after making the change to global (i.e. cross-source) viewport-based collision detection.

Mapbox vector tiles contain a collection of features to draw on a map, along with labels (either text or icons, generically called “symbols”) to attach to those features. Generally, the set of labels in a tile is larger than the set of labels that can be displayed on the screen at one time without overlap. To preserve legibility, we automatically choose subsets of those labels to display on the screen for any given frame: this is what we call “collision detection”. The set of labels that we choose can change dramatically as camera parameters change (pitch/rotation/zoom/etc.) because in general the shape of text itself will not be affected the same way as the underlying geometry the text is attached to.

Collision Detection Desiderata

- Correct: Labels don’t overlap!

- Prioritized: More important labels are chosen before less important labels

- Deterministic: viewing the same map data from the same camera perspective will always generate the same results

- Stable: During camera operations such as panning/zooming/rotating, labels should not disappear unless there’s no longer space for them on the screen

- Global: collision detection should account for all labels on the screen, no matter their source

- Smooth: when collisions happen, we should be able to animate fade transitions to remove “collided” labels

- Fast: collision detection should be integrated into a 60 FPS rendering loop, which means synchronous calculations that take more than a few milliseconds are unacceptable. On the other hand, latency of a couple hundred milliseconds in actually detecting a new collision is perceptually acceptable.

Basic Strategy

The core of our collision detection algorithm uses a two-dimensional spatial data structure we call a GridIndex that is optimized for fast insertion and fast querying of “bounding geometries” for symbols on the map. Every time we run collision detection, we go through the set of all items we’d like to display, in order of their importance:

- We use information about the size/style/position of the label, along with the current camera position, to calculate a bounding geometry for the symbol as it will display on the current screen

- We check if the bounding geometry for the symbol fits in unused space in the GridIndex.

- If there’s room, we insert the geometry, thereby marking the space as “used”. Otherwise, we mark this label “collided” and move on to the next label.
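The loop above is a greedy, priority-ordered placement. Here is a minimal sketch of that control flow. It assumes each label already carries a viewport-space bounding box for the current camera and a numeric `priority`. A plain array stands in for the GridIndex so the logic stays visible. `placeLabels` and the label fields are hypothetical names, not the gl-js or gl-native API.

```javascript
// Two axis-aligned boxes {x1, y1, x2, y2} overlap if they overlap on both axes.
function boxesOverlap(a, b) {
  return a.x1 < b.x2 && b.x1 < a.x2 && a.y1 < b.y2 && b.y1 < a.y2;
}

// Greedy placement: visit labels from most to least important and keep a
// label only if its box fits in space that is still unused.
function placeLabels(labels) {
  // Higher priority first; ties broken by id so the result is deterministic.
  const ordered = [...labels].sort((a, b) => b.priority - a.priority || a.id - b.id);
  const used = [];          // stand-in for the GridIndex ("used" space)
  const result = new Map(); // label id -> "placed" | "collided"

  for (const label of ordered) {
    if (used.some((other) => boxesOverlap(label.box, other))) {
      result.set(label.id, "collided");
    } else {
      used.push(label.box); // mark this space as used
      result.set(label.id, "placed");
    }
  }
  return result;
}
```

Because the loop is greedy, a more important label always wins a conflict, whatever order the labels arrived in. The tie-break on `id` is one simple way to satisfy the "deterministic" desideratum.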

The “global”, “smooth”, and “fast” desiderata add an important challenge for Mapbox GL because Mapbox maps are frequently combining data from multiple sources, and any change in any of the sources can potentially affect symbols from all of the other sources (because of “cascade” effects, introducing a symbol B that blocks symbol A could open up room for symbol C to display, which could in turn block a previously-showing symbol D…). To manage this challenge, we use a data structure we call the CrossTileSymbolIndex which allows us to globally synchronize collision detection (and symbol fade animation).

GridIndex

The GridIndex is a two-dimensional spatial data structure. Although the data structure itself is context-agnostic, in practice we use a GridIndex in which the 2D plane is the “viewport” — that is, the plane of the device’s screen. This is in contrast to the “tile” or “map” plane used to represent our underlying data. While most map-drawing logic is easiest to process in “map” coordinates, “viewport” coordinates are a better fit for text because in general we prefer text that is aligned flat relative to the screen.

GridIndex holds two types of geometries:

- Rectangles: used to represent “point” labels (e.g. a city name)

- Circles: used to represent “line” labels (e.g. a street name that follows the curve of the street)

We limit ourselves to these two geometry types because it is easy to quickly test for the three possible types of intersection (rectangle-rectangle, circle-circle, and rectangle-circle).
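Each of the three tests is only a few arithmetic operations. The sketch below assumes axis-aligned rectangles `{x1, y1, x2, y2}` and circles `{x, y, r}` in viewport pixels, and treats touching edges as non-colliding. The function names are illustrative; the real GridIndex may formulate these tests differently.

```javascript
// Rectangle-rectangle: the boxes must overlap on both axes.
function rectRect(a, b) {
  return a.x1 < b.x2 && b.x1 < a.x2 && a.y1 < b.y2 && b.y1 < a.y2;
}

// Circle-circle: centres closer than the sum of the radii.
// Squared distances avoid a square root.
function circleCircle(a, b) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  const rSum = a.r + b.r;
  return dx * dx + dy * dy < rSum * rSum;
}

// Rectangle-circle: clamp the circle centre to the rectangle to get the
// closest point of the rectangle, then check if that point is inside the circle.
function rectCircle(rect, c) {
  const nearestX = Math.max(rect.x1, Math.min(c.x, rect.x2));
  const nearestY = Math.max(rect.y1, Math.min(c.y, rect.y2));
  const dx = c.x - nearestX;
  const dy = c.y - nearestY;
  return dx * dx + dy * dy < c.r * c.r;
}
```

The clamping trick handles the corner case exactly. A circle whose bounding box overlaps a rectangle's corner is not reported as colliding unless the circle itself reaches the corner.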

Rectangles

Rectangles are a fairly good fit for point labels because:

- Text in point labels is laid out in a straight line

- Point labels are generally oriented to the viewport, which means their orientation won’t change during map rotation (although their position will change)

- Multi-line point labels are, as much as possible, balanced so that the lines approximate a rectangle: https://github.com/mapbox/hey/issues/6079

- Text is generally drawn on the viewport plane (i.e. flat relative to the screen). When it is drawn “pitched” (i.e. flat relative to the map), a viewport-aligned rectangle can still serve as a reasonable approximation, because the pitched projection of the text will be a trapezoid circumscribed by the rectangle.

Circles

Line labels are represented as a series of circles that follow the course of the underlying line geometry across the length of the label. The circles are chosen so that (1) both the beginning and end of the label will be covered by circles, (2) along the line, the gap between adjacent circles will never be too large, (3) for performance reasons, adjacent circles won’t significantly overlap. The code that chooses which circles to use for a label is tightly integrated with the line label rendering code, and it’s also code we’re thinking of changing: I’ll consider it out of scope for this document.

Representing a label as several circles is more expensive than representing it as a single rectangle, but still relatively cheap. Using multiple circles allows us to approximate arbitrary line geometries (and in fact there are outstanding feature requests that would require us to support other arbitrary collision geometries such as “don’t let labels get drawn over this lake” — we would probably implement support by converting those geometries into circles first).

The key benefit of circles relative to rectangles is that they are stable under rotation. Since line labels are attached to map geometry, they necessarily rotate with the map, unlike point labels, which are usually fixed to the viewport orientation. If we were using small rectangles to represent line labels (as we did before the most recent changes), then the viewport-aligned rectangles would rotate relative to the map during map rotation. The rotation change alone could cause two adjacent line labels to collide, violating our “stability” desideratum. Circles, on the other hand, are conveniently totally unaffected by rotation.

The Grid

The “grid” part of GridIndex is a technique for making queries faster that relies on the assumption that most geometries stored in the index will only occupy a relatively small portion of the plane. For example, when we use the GridIndex to represent a 600px by 600px viewport, we split the plane into a grid of 400 (20x20) 30px-square “cells”.

For each bounding geometry we insert, we check to see which cells the geometry intersects with, and make an entry in that cell representing a pointer to the bounding geometry. So for example a rectangle that spans from [x: 10, y: 10] to [x: 70, y: 20] would be inserted into the first three cells in the grid (which would span from [0,0] to [90, 30]).

When we want to test if a bounding geometry intersects with something already in the GridIndex, we first find the set of cells it intersects with. Then, for each of the cells it intersects with, we look up the set of bounding geometries contained in that cell, and directly test the geometries for intersection.
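The insert and query steps above can be sketched as a small class. It is deliberately simplified: it stores rectangles only (a circle could be bucketed by its bounding box in the same way), and `SimpleGridIndex`, `cellsFor`, `insert`, and `hitTest` are hypothetical names rather than the gl-js GridIndex API.

```javascript
class SimpleGridIndex {
  constructor(width, height, cellSize) {
    this.cellSize = cellSize;
    this.xCells = Math.ceil(width / cellSize);
    this.yCells = Math.ceil(height / cellSize);
    // One bucket per cell; each bucket holds indices into this.boxes.
    this.cells = Array.from({ length: this.xCells * this.yCells }, () => []);
    this.boxes = [];
  }

  toCellX(x) {
    return Math.max(0, Math.min(this.xCells - 1, Math.floor(x / this.cellSize)));
  }

  toCellY(y) {
    return Math.max(0, Math.min(this.yCells - 1, Math.floor(y / this.cellSize)));
  }

  // Indices of every cell the box touches (an edge lying exactly on a cell
  // boundary also pulls in the neighbouring cell).
  cellsFor(box) {
    const out = [];
    for (let y = this.toCellY(box.y1); y <= this.toCellY(box.y2); y++) {
      for (let x = this.toCellX(box.x1); x <= this.toCellX(box.x2); x++) {
        out.push(y * this.xCells + x);
      }
    }
    return out;
  }

  // Record a pointer to the box in every cell it touches.
  insert(box) {
    const id = this.boxes.push(box) - 1;
    for (const cell of this.cellsFor(box)) this.cells[cell].push(id);
  }

  // Only geometries that share a cell with `box` are tested directly.
  hitTest(box) {
    for (const cell of this.cellsFor(box)) {
      for (const id of this.cells[cell]) {
        const b = this.boxes[id];
        if (box.x1 < b.x2 && b.x1 < box.x2 && box.y1 < b.y2 && b.y1 < box.y2) return true;
      }
    }
    return false;
  }
}
```

With `new SimpleGridIndex(600, 600, 30)`, the rectangle from [10, 10] to [70, 20] in the example lands in cells 0, 1, and 2, matching the three cells described above. A query far away from it never looks at that rectangle at all.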

Given the assumption that bounding geometries are relatively uniformly distributed (which is a reasonable assumption for map labels), this approach has an attractive algorithmic property: doubling the dimensions of the index (and thus roughly doubling the number of entries) is not expected to have a significant impact on the cost of a query against the index. This is because for any given query the cost of finding the right cells is near constant, and the number of comparisons to run against each cell is a function of the density of the index, but not its overall size. Since our collision detection algorithm has to test every label for collision against every other label, near-constant-time queries allow us to keep collision detection time linear in the number of labels.

If the cell size is too small, the “fixed” part of a query (looking up cells) becomes too expensive, while if the cell size is too large, the index degenerates towards a case in which every geometry has to be compared against every other geometry on every query. We derived the 30px-square cell size by experimental profiling of common map animations. Conceptually, this is roughly the smallest size at which individual “collision circles” for 16px-high text are likely to fit within a single cell and will span at most four cells. Optimizing for individual circles makes sense because the most expensive collision detection scenario for us is a dense network of road labels, which will be almost entirely represented with circles.

CrossTileSymbolIndex

We satisfy the “smooth” desideratum by using animations to fade symbols in (when they’re newly allowed to appear) and out (when they collide with another label). However, our tile-based map presents a challenge: symbols that are logically separate (e.g. “San Francisco” at zoom level 10 and “San Francisco” at z11) may be conceptually tied together, and the user expects consistent animation between them (i.e. “San Francisco” should not fade out and fade back in when the z10 version is replaced by the z11 version, and if “San Francisco” is fading in while the zoom level is changing, the animation shouldn’t be interrupted by the zoom level change).

The CrossTileSymbolIndex solves this problem by providing a global index of all symbols, which identifies “duplicate” symbols across different zoom levels and assigns them a shared unique identifier called a crossTileID.

Opacities

Uploading data to the GPU is expensive, so to satisfy the “fast” desideratum, the only data we upload as a result of collision detection is a small “symbol opacity”, which is a “current opacity” between 0 and 1, and a “target opacity” of either 0 or 1. The shader running on the GPU is responsible for interpolating between the current opacity and the target opacity using a clock parameter passed in as an argument.

The output of the collision detection algorithm is used to set the target opacity of every symbol to either 0 or 1. Every time the opacities are updated by collision detection, the “clock parameter” is essentially reset to zero, and the CPU re-calculates the baseline “current opacity”. One way to conceptualize this is “if the current opacity is different from the target opacity, a fade animation is in progress”.
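The arithmetic behind an opacity state `[current, target]` is small enough to sketch. The sketch assumes a linear fade, and takes its 300ms duration from the “San Francisco” example in this document. It computes on the CPU what the text says the shader does on the GPU. `opacityAt` and `rebase` are hypothetical helper names.

```javascript
const FADE_MS = 300; // assumed duration of a full 0 -> 1 fade

// Opacity to draw `elapsedMs` after the clock was last reset.
function opacityAt(state, elapsedMs, fadeMs = FADE_MS) {
  const [current, target] = state;
  const step = elapsedMs / fadeMs; // fraction of a full fade completed
  return target > current
    ? Math.min(target, current + step)
    : Math.max(target, current - step);
}

// When collision detection runs again, the CPU bakes the progress so far
// into a new "current" value, and the clock restarts from zero.
function rebase(state, elapsedMs, newTarget, fadeMs = FADE_MS) {
  return [opacityAt(state, elapsedMs, fadeMs), newTarget];
}
```

Under these assumptions, `opacityAt([0, 1], 150)` is 0.5, and `rebase([0, 1], 150, 1)` yields the `[.5, 1]` state used in the walkthrough. A state such as `[1, 1]` or `[0, 0]` stays constant no matter how much time passes.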

Working with the shared, unique crossTileID makes it easy to handle smooth animations between zoom levels — instead of storing the current/target opacity for each symbol, we store it once per crossTileID. Then, at render time, we assign that opacity state to the “front-most” symbol, and assign the rest of the symbols the “invisible” opacity state [0,0].
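Storing one opacity state per `crossTileID` and handing it to a single copy of the symbol can be sketched as follows. The sketch does not decide what “front-most” means: it assumes the caller passes the rendered symbols already ordered front to back. Symbols whose ID has no stored state yet start invisible. `assignRenderOpacities` and the symbol fields are hypothetical.

```javascript
const HIDDEN = Object.freeze([0, 0]); // the "invisible" opacity state

// symbolsFrontToBack: [{ key, crossTileID }], already in front-to-back order.
// opacityByCrossTileID: Map from crossTileID to [current, target].
function assignRenderOpacities(symbolsFrontToBack, opacityByCrossTileID) {
  const seen = new Set();
  const result = new Map(); // symbol key -> opacity state used for rendering
  for (const s of symbolsFrontToBack) {
    if (seen.has(s.crossTileID)) {
      // A copy of this symbol is already in front: hide the duplicate.
      result.set(s.key, HIDDEN);
      continue;
    }
    seen.add(s.crossTileID);
    result.set(s.key, opacityByCrossTileID.get(s.crossTileID) ?? HIDDEN);
  }
  return result;
}
```

This reproduces the walkthrough that follows. While both tiles are rendered and the z10 copy is in front, the z11 copy gets `[0,0]`. Once the z10 tile is gone, the z11 copy inherits the shared state and the fade continues.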

Consider the “San Francisco” example, in which the label starts a 300ms fade-in while we are at zoom 10, and then we cross over into zoom 11 while the fade is still in progress.

- “San Francisco” at z10 is given crossTileID 12345. It starts fading in, so is given opacity state [0,1].

- z11 tile loads, “San Francisco” is given ID 12345, but at render time given opacity [0,0]

- After 150ms, collision detection runs and opacities are updated. 12345’s opacity is updated to [.5,1]

- z10 tile is unloaded. At this point z11 version of “San Francisco” is the front-most symbol for 12345, so it is rendered with [.5,1], allowing the fade-in animation to continue

- After another 150ms, the fade in animation completes (without requiring any opacity updates)

- After another 150ms, collision detection runs again and 12345’s opacity is updated to the “finished” state of [1,1].

This opacity strategy also gives us a way to start showing the data from newly loaded tiles before we’ve had a chance to re-run the global collision detection algorithm with data from that tile: for symbols that share a cross-tile ID with an already existing symbol, we just pick up the pre-existing opacity state, while we assign all new cross-tile IDs an opacity state of [0,0]. The general effect while zooming in is that a tile will start showing with its most important POIs (the ones shared with the previous zoom level), and then some short period of time after the tile loads, the smaller POIs and road labels will start fading in.

Detecting Duplicates

The CrossTileSymbolIndex is a collection of CrossTileSymbolLayerIndexes: duplicates can only exist within a single layer. Each CrossTileSymbolLayerIndex contains, for each integer zoom level, a map of tile coordinates to a TileLayerIndex. A TileLayerIndex represents all the symbols for a given layer and tile. It has a findMatches method that can identify symbols in its tile/layer that are duplicate with symbols in another tile/layer. The query logic is, for each symbol:

- If the query symbol is text, load the set of symbols that have the exact same text (often just one symbol). If the query symbol is an icon, load the set of all icons.

- Convert the query symbol into “world coordinates” (see https://github.com/mapbox/hey/issues/6350) at the zoom level of the index. If the index is at z11 and the query symbol is at z10, the conversion essentially looks like a doubling of the query coordinates.

- Reduce the precision of the coordinates — this is a type of rounding operation that allows us to detect “very close” coordinates as matches

- For each local symbol, test whether its scaled coordinates and the query symbol’s scaled coordinates are within a small “tolerance” limit of each other. If so, mark the query symbol as a duplicate by copying the local symbol’s crossTileID into it.
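The matching steps above can be sketched in a few lines. Several assumptions are made here. Each symbol carries world coordinates at its own zoom `z` and its `text` (`null` for icons). Precision is reduced by dropping the low 4 bits. Matches may differ by one rounded unit. Those constants and every name in the sketch are hypothetical and are not the values used by the real TileLayerIndex.

```javascript
const PRECISION_SHIFT = 4; // assumed: drop the low 4 bits of each coordinate
const TOLERANCE = 1;       // assumed: neighbouring rounded values still match

// Scale world coordinates to the index's zoom, then reduce precision.
// Going from z10 to z11 doubles the coordinates.
function scaledCoord(symbol, indexZoom) {
  const scale = Math.pow(2, indexZoom - symbol.z);
  return {
    x: Math.floor(symbol.x * scale) >> PRECISION_SHIFT,
    y: Math.floor(symbol.y * scale) >> PRECISION_SHIFT,
  };
}

function findMatches(querySymbols, indexSymbols, indexZoom) {
  for (const q of querySymbols) {
    if (q.crossTileID !== undefined) continue; // already matched elsewhere
    const qc = scaledCoord(q, indexZoom);
    // Text only matches identical text; icons (text === null) match any icon.
    const candidates = indexSymbols.filter((s) => s.text === q.text);
    for (const s of candidates) {
      const sc = scaledCoord(s, indexZoom);
      if (Math.abs(sc.x - qc.x) <= TOLERANCE && Math.abs(sc.y - qc.y) <= TOLERANCE) {
        q.crossTileID = s.crossTileID; // mark the query symbol as a duplicate
        break;
      }
    }
  }
}
```

For example, a z10 “San Francisco” at (1000, 1500) scales to (2000, 3000) in a z11 index. After rounding it matches a z11 “San Francisco” stored at that position. A label with different text, or one a hundred z10 units away, does not match.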

The index is updated every time a tile is added to the render tree. On adding a tile, the algorithm is:

1. Iterate over all children of the tile in the index, starting from the highest resolution zoom, and find any duplicates. Then, iterate over all parents of this tile, still in order of decreasing zoom. For each symbol in this tile, assign the first duplicate crossTileID you find.

2. For each symbol that didn’t get assigned a duplicate crossTileID, create a new unique crossTileID.

3. Remove index entries for any tiles that have been removed from the render tree.
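The three steps can be sketched with matching reduced to a caller-supplied predicate. The hypothetical `sameSymbol` stands in for `findMatches`. Tiles are plain objects `{ key, z, symbols }`, and the ID counter is a module-level variable. All of these are illustrative assumptions.

```javascript
let nextCrossTileID = 1; // assumed global counter for fresh IDs

// Steps 1 and 2: look for duplicates in children, then parents (each list
// ordered from highest zoom down); otherwise mint a new crossTileID.
function addTile(tile, childrenHighestZoomFirst, parentsHighestZoomFirst, sameSymbol) {
  const candidates = [...childrenHighestZoomFirst, ...parentsHighestZoomFirst];
  for (const symbol of tile.symbols) {
    for (const other of candidates) {
      const match = other.symbols.find((s) => sameSymbol(symbol, s));
      if (match) {
        symbol.crossTileID = match.crossTileID; // first duplicate found wins
        break;
      }
    }
    if (symbol.crossTileID === undefined) symbol.crossTileID = nextCrossTileID++;
  }
}

// Step 3: drop index entries for tiles no longer in the render tree.
function pruneIndex(indexedTiles, renderedTileKeys) {
  return indexedTiles.filter((t) => renderedTileKeys.has(t.key));
}
```

Running `addTile` before `pruneIndex` is what keeps a single-frame swap safe. The outgoing tile is still in the index when the incoming tile looks for duplicates, so its IDs carry over.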

A common operation is to replace a tile at one zoom level with a tile at a higher or lower zoom level. In many cases, the two tiles will both render simultaneously for some period before the old tile is removed, but it is also possible for there to be an instantaneous (i.e. single frame) swap between tiles in the render tree. In this case, an important property of the above algorithm is that the duplicate detection in step (1) happens before the unused tile pruning in step (3).

(this article is mostly a stub)

Mapbox GL's threading structure is built around the concept of message passing. In GL JS, this is enforced by the WebWorker interface, while in gl-native we implement message passing using the Actor interface. In GL JS efficient transfer between threads requires serializing to raw TypedArrays, while in native we can simply transfer ownership of pointers. The relative difficulty of transferring ownership on the JS side leads to some architectural compromises (for instance, on native, any worker thread can pick up work on a tile, whereas on the JS side tiles are coupled to individual workers in order to hold onto intermediate state). Native also takes advantage of the Immutable pattern to allow read-only sharing between threads.

All versions of GL have a pool of "workers" that are primarily responsible for background tile generation, along with a "render" thread that continually renders the current state of the map using whatever tiles are currently available. On JS and iOS, the render thread is the same as the foreground/UI thread. For performance reasons, the Android render thread is separated from the UI thread -- changes to the map made on the UI thread are batched and sent to the render thread for processing. The platforms also introduce worker threads for various platform-specific tasks (for instance, running HTTP requests in the background), but in general the core code is agnostic about where those tasks get performed: it sends a request and runs a callback when it gets a response. The platform implementations of CustomGeometrySource currently run their own worker pools, but I think we should probably get rid of them: https://github.com/mapbox/mapbox-gl-native/issues/11895.

Each platform is required to provide its own implementation of concurrency/threading primitives for gl-core to use. The platform code outside of core is also free to use its own threading model (for instance, the iOS SDK uses Grand Central Dispatch for running asynchronous tasks).

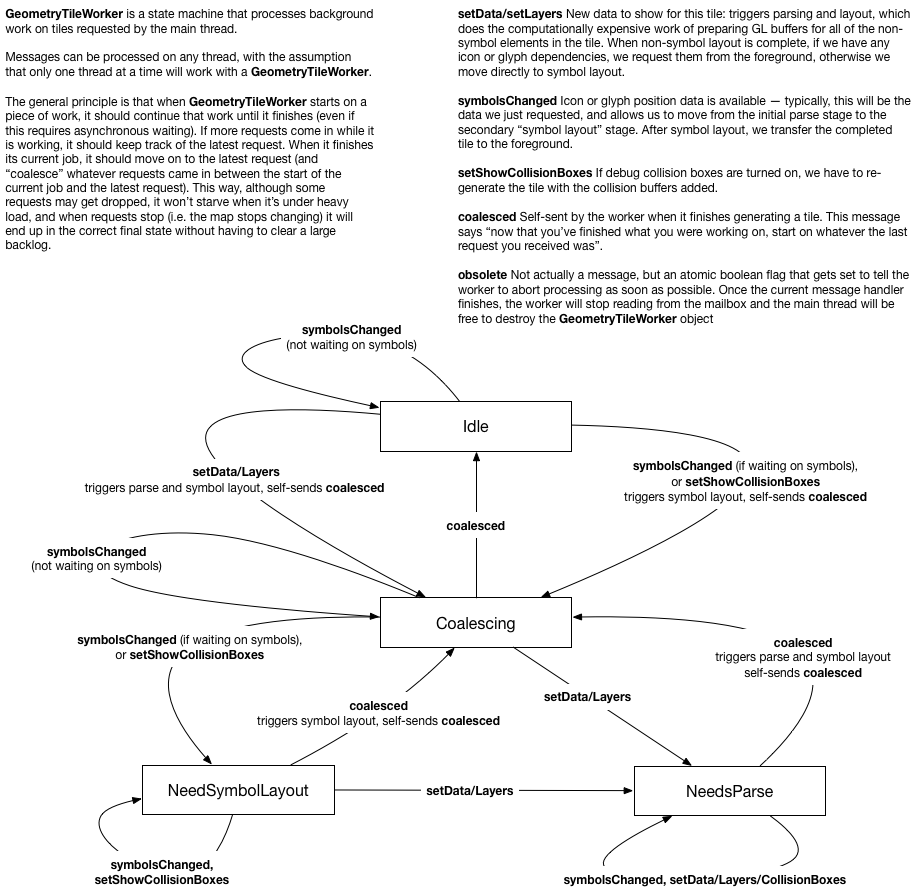

Geometry Tile Worker

Expression Architecture

This document is meant to be an introduction to working with “expressions” in the Mapbox GL codebase. It uses gl-js examples, but the gl-native implementation is usually closely analogous (with a few layers of templating on top). I wasn’t very involved in the original design discussions, so this is mostly a distillation of what I’ve learned in the process of extending the expression language with the “collator” and “format” types. In my experience, the expression language itself is not as hard to understand as its embedding within the rest of the map.

Background

Key Mapbox GL concepts from pre-expression days:

- “Layout” properties in the style spec are properties that are calculated at tile generation time, in the background. e.g. 'text-font': ["DIN Office Pro", "Arial Unicode MS"]

- “Paint” properties are calculated at render time, and can generally be updated much more quickly. Whether something is a paint or layout property often just depends on the implementation difficulty of calculating the property at run time. e.g. 'circle-radius': 20

- There are monster hybrids, like text-size, which is a “layout” property but is also evaluated at render time.

- “Data-Driven Styling (DDS)” This is the ability to generate layout and paint properties separately for each individual feature in a layer, based on (1) zoom and (2) feature properties.

- “Run-Time Styling” The ability to change the style at run-time. Not directly relevant to expressions except that changes require re-evaluating expressions. Changes to paint properties can be made immediately, while changes to layout properties require re-parsing tiles.

- “Function” This is the pre-expression syntax for DDS. “functions” exposed a fixed set of interpolation functions that could map input parameters to output parameters. Functions are still supported, but under the hood we simply convert them into equivalent general purpose “expressions”. Example: 'circle-radius': { property: 'population', stops: [ [{zoom: 8, value: 0}, 0], [{zoom: 8, value: 250}, 1], [{zoom: 11, value: 0}, 0], [{zoom: 11, value: 250}, 6], [{zoom: 16, value: 0}, 0], [{zoom: 16, value: 250}, 40] ] }

- “Layer” Know your “source layer” (this is a layer of data in a vector tile) from your “style layer” (this is a layer in the style sheet, you could also think of this as a “rendered” layer)

- “Filter” A filter expresses rules for filtering features from a source layer to be included or excluded from a style layer. Before expressions, filters used their own mini-language in the style spec JSON. Pre-expression filters are now converted into expressions before being evaluated.

- “Interpolation” We support linear, categorical, exponential, and cubic-bezier interpolation functions, both before and after expressions.

- “Constant” vs. “Zoom-Dependent” vs. “Property-Dependent” vs. “Zoom-and-Property-Dependent” Every property falls into one of these categories, both before and after expressions. Typically different code pathways are used for each category, with the most complex/expensive code pathway for zoom-and-property-dependent paint properties. In expression terms, you will see the equivalent isFeatureConstant or isZoomConstant, and also CameraExpression (zoom), SourceExpression (property), and CompositeExpression (zoom-and-property).

- “Paint Property Binders” These are a complex piece of ~~magic~~ logic that support rendering of property-dependent and zoom-and-property-dependent features with minimal render-time calculation. Hand-wavy, not-quite-accurate explanation: for each “bound” property (for example, circle-radius), and for each feature in the layer, there’s an entry recording the value for that feature evaluated at tileZoom and also evaluated at tileZoom + 1. At render time, an interpolation parameter is calculated based on the current zoom and the interpolation curve, and then passed to the shader.

  - This approach doesn’t change with expressions, which explains this limitation from the docs: “… in layout or paint properties, ["zoom"] may appear only as the input to an outer [interpolate](https://www.mapbox.com/mapbox-gl-js/style-spec/#expressions-interpolate) or [step](https://www.mapbox.com/mapbox-gl-js/style-spec/#expressions-step) expression, or such an expression within a [let](https://www.mapbox.com/mapbox-gl-js/style-spec/#expressions-let) expression.”

  - For more detail, see program_configuration.js, specifically the Binder interface.

- “Tokens” e.g. {name_en}. The pre-expression way to refer to per-feature data in vector tiles. Before expressions, these could only be used in specialized contexts (like the text-field property). After expressions, tokens are automatically converted into “get” expressions, e.g. ["get", "name_en"].
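The hand-wavy “Paint Property Binders” explanation in the list above can be made concrete with a small sketch. It assumes a linear zoom curve and a hypothetical `evaluate(zoom, feature)` callback. In the real renderer the final mix happens in the shader; here it is ordinary JavaScript.

```javascript
// At tile build time: store [value at tileZoom, value at tileZoom + 1]
// for every feature.
function buildBinderBuffer(features, tileZoom, evaluate) {
  return features.map((f) => [evaluate(tileZoom, f), evaluate(tileZoom + 1, f)]);
}

// At render time: compute one interpolation factor for the whole layer
// (linear curve assumed), then mix each feature's pair. This mix is the
// part the shader would do per vertex.
function renderTimeValues(buffer, tileZoom, currentZoom) {
  const t = Math.max(0, Math.min(1, currentZoom - tileZoom));
  return buffer.map(([a, b]) => a + (b - a) * t);
}
```

Only two samples per feature are stored. This is why ["zoom"] must sit at the outer interpolate or step: any other zoom dependence could not be reconstructed from the pair.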

Design Goals

- Complete the work of DDS in exposing data in vector tiles as input for styling, without falling back to implementing one specialized “function” after another. The original name for this feature was “arbitrary expressions” — representing the alternative of implementing one expression at a time.

  - See https://github.com/mapbox/mapbox-gl-js/issues/4077 for some background

- Allow arbitrary logical and mathematical operations on per-feature data

- Do not allow looping, Turing-completeness, etc. The idea is to make a declarative language in which it should be relatively difficult to shoot yourself in the foot. We don’t want style authors to be able to make a layer whose cost to evaluate scales super-linearly with the number of features in the layer.

- Can easily be extended with new “vocabulary” over time

- Can be embedded in existing style spec

- Can port all existing functionality to expressions

- Minimal evaluation overhead

The JSON/“lispy” syntax we went with satisfied the above goals, and had the attraction that the written form of expressions stayed close to the underlying AST. Our users are generally not excited about learning a new DSL to modify styling options — but one way to think of this is that the expression language itself can be a target of other, more appropriate DSLs. For example, on the iOS SDK, we wrap expressions in the platform-appropriate [NSExpression](https://www.mapbox.com/ios-sdk/api/4.6.0/predicates-and-expressions.html) syntax. In Studio, we use Jamsession to make a simplified expression builder.

Implementation

For each filter or property that uses an expression, we parse the raw JSON using a “parsing context” for that property. The result is a parsed expression tree, which is then embedded in a PropertyValue. At evaluation time, we provide an “evaluation context” and then evaluate the expression tree starting from the root. The result will be a constant value matching the property’s type.

Parsing

The root of the parsing logic is in parsing_context.js. You start parsing with a mostly empty context (it contains information such as the expected type of the result, which is used in some automatic coercion logic). The first item in an expression array is the name (or “operator”) of the expression (e.g. "concat"). The parser looks up the Expression implementation for that operator, and then hands parsing off to the class. Each implementation has its own logic for parsing children: if it accepts arguments that are themselves expressions, it will recurse into the root parsing logic, but with added context (for instance, expected types, bound "let" variables, etc.).
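The dispatch pattern above can be sketched as follows. A registry maps an operator name to a class; that class's static `parse` handles its own arguments and recurses back into the context for child expressions. This is a toy with hypothetical names (`Literal`, `Concat`, `Get`, `registry`, `context`). It leaves out types, `let` bindings, and error collection, and it is not the gl-js ParsingContext.

```javascript
class Literal {
  constructor(value) { this.value = value; }
  evaluate() { return this.value; }
}

class Concat {
  static parse(args, context) {
    // args[0] is the operator name; every other argument is a child expression.
    return new Concat(args.slice(1).map((arg) => context.parse(arg)));
  }
  constructor(children) { this.children = children; }
  evaluate(ctx) { return this.children.map((c) => String(c.evaluate(ctx))).join(""); }
}

class Get {
  static parse(args) { return new Get(args[1]); }
  constructor(name) { this.name = name; }
  // Reads per-feature data from the evaluation context.
  evaluate(ctx) { return ctx.feature.properties[this.name]; }
}

const registry = { concat: Concat, get: Get };

const context = {
  parse(json) {
    if (!Array.isArray(json)) return new Literal(json);
    const Impl = registry[json[0]];
    if (!Impl) throw new Error(`Unknown expression "${json[0]}"`);
    return Impl.parse(json, this); // hand parsing off to the class
  },
};
```

Parsing `["concat", "Hello, ", ["get", "name"]]` builds a small tree once. Evaluating it with a context whose feature has `name: "SF"` returns `"Hello, SF"`.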

CompoundExpression

Most expressions don’t need any special parsing rules beyond knowing their return type, their argument types, and any argument type overloads. All of these expressions are implemented using CompoundExpression. Example (from definitions/index.js):

```javascript
'^': [
    NumberType,
    [NumberType, NumberType],
    (ctx, [b, e]) => Math.pow(b.evaluate(ctx), e.evaluate(ctx))
],
```

This says "^" is an expression that returns a number, and it expects as arguments two expressions that return numbers (they could be constant expressions). The evaluator evaluates both child expressions, and then applies Math.pow to the results. ctx is the “evaluation context” getting passed through: a child expression might use it to know the current zoom level, or to look up a feature property, etc.
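A definition table in this style also makes parse-time checking mechanical: compare the number and types of the parsed arguments against the signature. The sketch below uses plain strings for types and has no overloads, both simplifying assumptions. `definitions`, `literal`, and `parseCompound` are hypothetical names.

```javascript
// name -> [return type, argument types, evaluator]
const definitions = {
  "^": ["number", ["number", "number"], (ctx, [b, e]) => Math.pow(b.evaluate(ctx), e.evaluate(ctx))],
  "upcase": ["string", ["string"], (ctx, [s]) => s.evaluate(ctx).toUpperCase()],
};

function literal(value) {
  return { type: typeof value, evaluate: () => value };
}

function parseCompound(json) {
  if (!Array.isArray(json)) return literal(json);
  const [name, ...rawArgs] = json;
  const def = definitions[name];
  if (!def) throw new Error(`Unknown expression "${name}"`);
  const [type, argTypes, evaluator] = def;
  const args = rawArgs.map(parseCompound); // recurse into children first
  if (args.length !== argTypes.length) {
    throw new Error(`"${name}" expects ${argTypes.length} arguments, got ${args.length}`);
  }
  args.forEach((arg, i) => {
    if (arg.type !== argTypes[i]) {
      throw new Error(`"${name}" argument ${i + 1}: expected ${argTypes[i]}, found ${arg.type}`);
    }
  });
  // The parsed node carries its return type so parents can check it too.
  return { type, evaluate: (ctx) => evaluator(ctx, args) };
}
```

For example, `parseCompound(["^", ["^", 2, 2], 2]).evaluate({})` gives 16. `["^", 2, "x"]` is rejected at parse time, before any feature is evaluated.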

Types, Assertions, and Coercions

The expression language has parse-time and run-time type checking, based on this set of types:

```javascript
export type Type =
    NullTypeT |
    NumberTypeT |
    StringTypeT |
    BooleanTypeT |
    ColorTypeT |
    ObjectTypeT |
    ValueTypeT |
    ArrayType |
    ErrorTypeT |
    CollatorTypeT |
    FormattedTypeT
```

An assertion is a type of expression that allows you to give a return type to something that doesn’t have a type. So for instance ["get", "feature_property"] returns the generic ValueType, but if you want to pass it to an expression that requires a string argument, you can use an assertion: ["string", ["get", "feature_property"]]. Assertions throw an evaluation-time error if the types don’t match during evaluation.

A coercion is a type of expression that allows you to convert return types. You can provide fallbacks in case coercion fails, e.g. ["to-number", ["get", "feature_property"], 0].

The initial implementation of the expression language erred on the side of requiring users to be explicit about types; for instance, "get" expressions very frequently had to be wrapped in assertions. In response to user feedback, we started building more “implicit” typing into the parsing engine. This is accomplished by automatically adding Assertion and Coercion expressions at parse time (they are called “annotations”). The basic rules are:

- When we expect a number, string, boolean, or array but have a value, wrap it in an assertion.

- When we expect a color or formatted string but have a string or value, wrap it in a coercion.
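The two annotation rules above amount to a small decision function. In this sketch types are plain strings and `"value"` stands for the generic type returned by `["get", ...]`. `annotationFor` is a hypothetical name, and the real parser builds actual Assertion or Coercion nodes rather than returning a label.

```javascript
// Returns which wrapper the parser would add, or null when none applies.
function annotationFor(expected, actual) {
  if (expected === actual) return null; // types already agree
  if (["number", "string", "boolean", "array"].includes(expected) && actual === "value") {
    return "assertion"; // e.g. ["number", ["get", "x"]]
  }
  if (["color", "formatted"].includes(expected) && (actual === "string" || actual === "value")) {
    return "coercion"; // e.g. ["to-color", ["get", "c"]] for colors
  }
  return null; // anything else is left for normal type checking to reject
}
```

Note the asymmetry. A bare string is never silently asserted to be a number, but a string is coerced when a color or formatted value is expected.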

Constant Folding

There’s not much compile-time optimization in expressions, but there is one thing we do at compile time: whenever we parse a sub-expression, we check if it’s “constant” (i.e. it doesn’t depend on any evaluation context). If so, we evaluate the expression and replace it with a Literal expression containing the result of the evaluation.
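Constant folding over a JSON expression tree can be sketched as below. The sketch assumes only `["get", ...]` and `["zoom"]` depend on the evaluation context. A tiny hypothetical evaluator handles `literal`, `zoom`, `get`, `+`, and `*`. The real implementation folds parsed Expression objects, not raw JSON.

```javascript
const CONTEXT_DEPENDENT = new Set(["get", "zoom"]);

function evalJson(expr, ctx) {
  if (!Array.isArray(expr)) return expr;
  const [op, ...args] = expr;
  switch (op) {
    case "literal": return args[0];
    case "zoom": return ctx.zoom;
    case "get": return ctx.properties[args[0]];
    case "+": return args.reduce((sum, a) => sum + evalJson(a, ctx), 0);
    case "*": return args.reduce((prod, a) => prod * evalJson(a, ctx), 1);
    default: throw new Error(`Unknown operator ${op}`);
  }
}

// Constant means: no node in the subtree reads the evaluation context.
function isConstant(expr) {
  if (!Array.isArray(expr)) return true;
  const [op, ...args] = expr;
  if (op === "literal") return true;
  return !CONTEXT_DEPENDENT.has(op) && args.every(isConstant);
}

function fold(expr) {
  if (!Array.isArray(expr) || expr[0] === "literal") return expr;
  // Safe to pass no context: a constant subtree never reads it.
  if (isConstant(expr)) return ["literal", evalJson(expr, null)];
  const [op, ...args] = expr;
  return [op, ...args.map(fold)];
}
```

For example, `fold(["+", ["*", 2, 3], ["get", "n"]])` becomes `["+", ["literal", 6], ["get", "n"]]`. The multiplication is done once at parse time, and only the `get` is left for every feature.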

Evaluation

Evaluation is really pretty simple — you call “evaluate” on the root expression, it recurses, and eventually gives you back either a result or an error. The somewhat tricky part is the provided EvaluationContext, which hooks the expression language up to actual data on the map. It contains:

- GlobalProperties: global map state, like the current zoom level

- Feature: if this expression is being evaluated per-feature, this is the actual data for the feature from the underlying vector tile

- FeatureState: global “feature state” index, if necessary for this expression

Property, PropertyValue, and PossiblyEvaluatedValue, oh my…

This is technically outside the expression language, but understanding how style properties are hooked up to expressions is key to understanding how expressions are actually used. properties.js has lots of documentation! To start getting oriented:

- Property refers to a property in the style specification, e.g. 'circle-radius'.

- PropertyValue refers to the right-hand side of a property in the style sheet, e.g. 'circle-radius': 20. It can be a constant value or an expression.

- PossiblyEvaluatedValue is all about not re-evaluating when you don’t need to. So if you have a “Property” that’s of type “DataDrivenProperty”, it will have a “PossiblyEvaluatedValue”. But for instance if that value is an expression and it’s “feature-constant”, then we can just store the value here and return it in future calls to possiblyEvaluate instead of continually re-evaluating the expression.

Adding a New Expression

Adding a new expression is actually pretty easy, as long as you don’t have to modify the type system. If it fits the parsing pattern of CompoundExpression, then you can just add it to the CompoundExpression registry, with a custom evaluation function. If not, let’s see how to implement a “Foo” expression!

Register the operator in definitions/index.js:

```javascript
import FooExpression from './foo';

const expressions: ExpressionRegistry = {
    // ...existing operators...
    'foo': FooExpression
};
```

Create an implementation file at definitions/foo.js:

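```javascript
import { StringType } from '../types';

import type { Type } from '../types';
import type { Expression } from '../expression';
import type ParsingContext from '../parsing_context';
import type EvaluationContext from '../evaluation_context';

export default class FooExpression implements Expression {
    type: Type;
    child: Expression;

    constructor(child: Expression) {
        this.type = StringType; // evaluate() always returns a string
        this.child = child;
    }

    // The methods below all go inside this class body.
}
```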

Implement static parse logic that’s used to create instances of the expression:

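```javascript
static parse(args: Array<mixed>, context: ParsingContext): ?FooExpression {
    // Here's where you enforce syntax -- if type checking fails, you pass
    // the error back up the chain with context.error.
    // args[0] is the operator name ('foo'), so one argument means length 2.
    if (args.length !== 2)
        return context.error(`'foo' expression requires exactly one argument.`);

    // Parse the argument as a child expression (recursing into the root
    // parsing logic). The context records any error and returns null.
    const child = context.parse(args[1], 1, StringType);
    if (!child) return null;

    return new FooExpression(child);
}
```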

Implement the evaluate method:

```javascript
evaluate(ctx: EvaluationContext) {
    // We don't use the context, but pass it through to our child
    return "bar" + this.child.evaluate(ctx);
}
```

Implement the eachChild method — this is necessary for various algorithms that traverse the expression tree:

```javascript
eachChild(fn: (Expression) => void) {
    fn(this.child);
}
```

Implement the possibleOutputs method. This is used to do a simple type of static analysis for expressions that have a finite number of possible outputs (for instance, if the top-level expression is a "match" expression with three literal outputs, the only possible outputs are those three, no matter what goes on in the sub-expressions). Some properties are required to have a defined set of possible outputs (for instance text-font), because we need to be able to fetch them ahead of evaluation time. If your expression depends on external state, it could very easily have an infinite number of potential outputs, in which case simply return [undefined].

```javascript
possibleOutputs() {
    // Cop-out!
    return [undefined];
}
```

Finally, implement the serialize method — this is basically the inverse of the parse method. The serialized result may not look identical to the original input that created an expression (because of changes like constant folding), but when evaluated it should give the same result (and in fact our test harness asserts that):

```js
serialize() {
    return ["foo", this.child.serialize()];
}
```
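To see how parse, evaluate, eachChild and serialize fit together without the rest of gl-js, here is a self-contained toy version you can run in Node. ToyContext and ToyLiteral are hypothetical stand-ins for ParsingContext and a literal child expression, not their real implementations (no type checking, no constant folding); only the shape of the pattern is meant to match the walkthrough above.

```javascript
// Hypothetical stand-in for a literal child expression (not gl-js's Literal).
class ToyLiteral {
  constructor(value) { this.value = value; }
  evaluate(ctx) { return this.value; }
  eachChild(fn) {}
  possibleOutputs() { return [this.value]; }
  serialize() { return this.value; }
}

// Hypothetical stand-in for ParsingContext: records errors and dispatches
// array expressions to the registered definition by operator name.
class ToyContext {
  constructor() { this.errors = []; }
  error(message) { this.errors.push(message); return null; }
  parse(expr) {
    if (Array.isArray(expr)) {
      const Definition = definitions[expr[0]];
      if (!Definition) return this.error(`unknown expression '${expr[0]}'`);
      return Definition.parse(expr, this);
    }
    if (typeof expr === "string") return new ToyLiteral(expr);
    return this.error("expected a string or an expression");
  }
}

// Same shape as the FooExpression walkthrough, minus the Flow types.
class FooExpression {
  constructor(child) { this.child = child; }
  static parse(args, context) {
    if (args.length !== 2)
      return context.error("'foo' expression requires exactly one argument.");
    const child = context.parse(args[1]);
    if (!child) return null;
    return new FooExpression(child);
  }
  evaluate(ctx) { return "bar" + this.child.evaluate(ctx); }
  eachChild(fn) { fn(this.child); }
  possibleOutputs() { return [undefined]; }
  serialize() { return ["foo", this.child.serialize()]; }
}

const definitions = { foo: FooExpression };

// Usage: parse, evaluate, then check that serialize round-trips.
const ctx = new ToyContext();
const expr = ctx.parse(["foo", ["foo", "baz"]]);
console.log(expr.evaluate({}));                // barbarbaz
console.log(JSON.stringify(expr.serialize())); // ["foo",["foo","baz"]]
```

Re-parsing the serialized form and evaluating it again gives the same result, which is the property the "serialized" expectation in the test fixtures checks.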

You’re done! Well, almost: head straight over to test/integration/expression-tests, find an expression that’s similar to yours, copy its tests, and modify them to fit yours. An expression test has:

- “expression”: of course

- “inputs”: array of Feature/GlobalProperties pairs

- “expected”, containing:

  - “compiled”: type information

  - “outputs”: evaluation results or errors

  - “serialized”: result of round-tripping the expression through parse/serialize

GL Text Rendering 101

This document is intended as a high level architectural overview of the path from a text-field entry in a style specification to pixels on a screen. The primary audience is new maintainers or contributors to mapbox-gl-native and mapbox-gl-js, although it may also provide useful context for power users of Mapbox GL who want to know how the sausage is made.

Native and JS share very similar implementations, with occasionally different naming conventions. I will use them mostly interchangeably. For simplicity, I focus on text, but icons are a very close analog — for many purposes, you can think of an icon as just a label with a single “glyph”.

Motivation

Why are we rolling our own text rendering in the first place, when browsers and operating systems already provide powerful text capabilities for free? Basically:

- Control: To make beautiful interactive maps, we’re changing properties of the text in a label on every frame:

  - Size/scale

  - Relative positioning of glyphs (e.g. following a curved line)

  - Paint properties

- Speed: If we asked the platform to draw the text for us and then uploaded the raster results to textures for rendering on the GPU, we’d have to re-upload a large amount of texture data for every new label, every change in size, etc. By rolling our own text rendering, we can take advantage of the GPU to efficiently re-use/re-combine small constituent parts (glyphs)

Legible, fast, and beautiful is the name of the game.

For some history on how this took shape, check out Ansis' "label placement" blog post from 2014 (many implementation details have changed, but the goals are the same).

Requesting glyphs: text-field + text-font → GlyphDependencies

Imagine a toy symbol:

```json
"layout": {
    "text-field": "Hey",
    "text-font": [
        "Open Sans Semibold",
        "Arial Unicode MS Bold"
    ]
}
```

The text-font property is specified as a fontstack, which looks like an array of fonts, but from the point of view of GL is just a unique string that’s used for requesting glyph data from the server. Font compositing happens on the server and is totally opaque to GL.

At tile parsing time, the symbol parsing code builds up a glyph dependency map by evaluating text-field and text-font for all symbols in the tile and tracking unique glyphs:

```
GlyphDependencies: {
    "Open Sans Semibold, Arial Unicode MS Bold": ['H', 'e', 'y'],
    "Open Sans Regular, Arial Unicode MS": ['t', 'h', 'e', 'r']
}
```
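The dependency-gathering step can be sketched as grouping unique codepoints by fontstack. This assumes symbols have already been evaluated to hypothetical {text, font} pairs, and it joins the font array with ", " only to match the keys shown above; the real key format may differ.

```javascript
// Build fontstack -> Set of codepoints, tracking each glyph only once.
// Input shape {text, font} is a hypothetical, pre-evaluated symbol.
function gatherGlyphDependencies(symbols) {
  const deps = {};
  for (const { text, font } of symbols) {
    const fontstack = font.join(", "); // the fontstack is just an opaque string key
    const glyphs = deps[fontstack] || (deps[fontstack] = new Set());
    // Iterating a string yields code points, not UTF-16 code units.
    for (const ch of text) glyphs.add(ch.codePointAt(0));
  }
  return deps;
}

const deps = gatherGlyphDependencies([
  { text: "Hey", font: ["Open Sans Semibold", "Arial Unicode MS Bold"] },
  { text: "there", font: ["Open Sans Regular", "Arial Unicode MS"] },
]);
```

Using a Set per fontstack is what makes the repeated "e" in "there" collapse to a single entry.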

It’s worth noting here that our current code treats “glyph” and “unicode codepoint” interchangeably, although that assumption won’t always hold.

Once all the dependencies for a tile have been gathered, we send a getGlyphs message to the foreground, and wait for a response before moving on to symbol layout (unless the worker already has all the dependencies satisfied from an earlier request).

native

SymbolLayout constructor: symbol_layout.cpp

js

SymbolBucket#populate: symbol_bucket.js

GlyphManager

The GlyphManager lives on the foreground and its responsibility is responding to getGlyphs requests with an SDF bitmap and glyph metrics for every requested glyph. The GlyphManager maintains an in-memory cache, and if all the requested glyphs are already cached, it will return them immediately. If not, it will request them.

Server Glyph Requests

Every style with text has a line like this:

"glyphs": "mapbox://fonts/mapbox/{fontstack}/{range}.pbf"

This tells the GlyphManager where to request the fonts from (mapbox://fonts/… will resolve to something like https://api.mapbox.com/…). For each missing glyph, the GlyphManager will request a 256-glyph block containing that glyph. The server is responsible for providing the block of glyphs in a Protobuf-encoded format. Requests to api.mapbox.com (and probably most requests to custom-hosted font servers) are ultimately served by node-fontnik.

When the response comes back over the network, the GlyphManager adds all the glyphs in the block to its local cache (including ones that weren’t explicitly requested). Once all dependencies are satisfied, it sends a response back to the worker.
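A minimal sketch of the request side: find which 256-glyph blocks contain uncached glyphs, then expand the style's "glyphs" template for each block. The cache shape (a Set of codepoints) and the example URL are hypothetical, and resolving mapbox:// URLs and URL-encoding the fontstack are skipped.

```javascript
// Which 256-glyph blocks must be fetched for one fontstack?
// `cached` is a hypothetical Set of codepoints already in the local cache.
function missingRanges(requested, cached) {
  const ranges = new Set();
  for (const id of requested) {
    if (!cached.has(id)) ranges.add(Math.floor(id / 256));
  }
  return [...ranges].sort((a, b) => a - b);
}

// Expand a block index into a URL from a "{fontstack}/{range}" template.
function glyphUrl(template, fontstack, range) {
  const start = range * 256;
  return template
    .replace("{fontstack}", fontstack)
    .replace("{range}", `${start}-${start + 255}`);
}

// "H" is cached; "e" and "y" share block 0; U+4E2D lives in block 78.
const ranges = missingRanges([72, 101, 121, 0x4e2d], new Set([72]));
const urls = ranges.map((r) =>
  glyphUrl("https://example.com/fonts/{fontstack}/{range}.pbf", "Open Sans Regular", r));
```

Requesting whole blocks is why one response fills the cache with glyphs nobody asked for yet.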

Local Glyph Generation

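The server request system above works pretty well for Latin scripts: usually there’s just one 0-255.pbf request for every fontstack. But it’s a disaster for ideographic/CJK scripts, where the glyphs used in a single tile can be scattered across many different 256-glyph blocks, each needing its own request. That’s why we implemented local CJK glyph generation.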

When local glyph generation is enabled, the GlyphManager is responsible for choosing whether to go to the server or generate each glyph locally, so the rest of the code doesn’t have to worry about it.
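The per-glyph decision could look like the sketch below. The set of blocks treated as "generate locally" is an assumption built from standard Unicode block ranges, not the exact list the real code uses. It also shows the scale of the problem: the CJK Unified Ideographs block alone (U+4E00 to U+9FFF) spans 82 of the 256-glyph server blocks.

```javascript
// Hypothetical policy: generate glyphs in these Unicode blocks locally,
// fetch everything else from the server. Ranges are inclusive.
const LOCAL_BLOCKS = [
  [0x3040, 0x309f], // Hiragana
  [0x30a0, 0x30ff], // Katakana
  [0x4e00, 0x9fff], // CJK Unified Ideographs
  [0xac00, 0xd7af], // Hangul Syllables
];

function shouldGenerateLocally(id, localGenerationEnabled) {
  return localGenerationEnabled &&
    LOCAL_BLOCKS.some(([lo, hi]) => id >= lo && id <= hi);
}

// Number of 256-glyph server blocks covered by CJK Unified Ideographs.
const cjkServerBlocks = (0x9fff - 0x4e00 + 1) / 256;
```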

GlyphAtlas

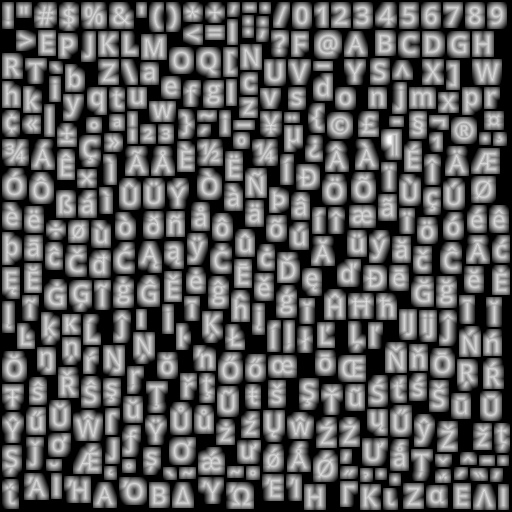

Once the worker receives glyph information from the foreground, it builds a GlyphAtlas out of every glyph that will be used on a tile. The glyph atlas is a single image that will be uploaded as a texture to the GPU along with the rest of the data for the tile. Here’s a visual representation of a glyph atlas:

Once we’ve uploaded the glyph atlas to the GPU, we can represent each glyph we want to draw as two triangles, where the vertices encode both a physical location on the map and a “lookup” location within the glyph atlas texture.

The raster format of the glyph is not a typical grayscale encoding — instead, it’s a “signed distance field” (SDF), a raster format in which the value of each pixel encodes how far away it is from the “edge” of the glyph. SDFs make it easier for us to do things like dynamically resize text or draw “halos” behind text. For an in-depth description, read Konstantin’s SDF devlog.

Symbol Layout

At the time we parsed the tile, we created one SymbolBucket for each set of symbol layers that shared the same layout properties (typically there is a 1:1 mapping between style layers and symbol buckets — but when two style layers differ only in their paint properties we share their layout work as an optimization). After parsing, the bucket contains a list of “features” that point into the raw vector tile data.

“Layout” is the process of turning those raw features into GL buffers ready for upload to the GPU, along with collision metadata (the FeatureIndex). The process starts by iterating over every feature, evaluating all of the style properties against that feature, and generating anchor points for the resulting symbol. Then we move on to shaping…

native

SymbolLayout::prepareSymbols: symbol_layout.cpp

js

performSymbolLayout: symbol_layout.js

Shaping

Shaping is the process of choosing how to position glyphs relative to each other. This can be a complicated process, but the basics are pretty simple — we start by placing our first glyph at some origin point, then we advance our “x” coordinate by the “advance” of the glyph, and place our second glyph. When we come to a line break, we reset our “x” coordinate and increment our “y” coordinate by the line height.
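The basic loop described above fits in a few lines. This is a minimal sketch: the advance table and line height are hypothetical inputs, and the real shaper also handles justification, anchor offsets, kerning-free metrics from the glyph data, and more.

```javascript
// Minimal shaping: place glyphs relative to the symbol anchor.
// `advances` maps a character to its advance (hypothetical point values).
function shape(text, advances, lineHeight) {
  const positioned = [];
  let x = 0;
  let y = 0;
  for (const ch of text) {
    if (ch === "\n") {        // line break: reset x, move down one line
      x = 0;
      y += lineHeight;
      continue;
    }
    positioned.push({ ch, x, y });
    x += advances[ch];        // advance the pen by this glyph's advance
  }
  return positioned;
}

const shaped = shape("Hi\nyo", { H: 10, i: 4, y: 8, o: 9 }, 24);
```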

We do shaping in a coordinate system that’s independent for each symbol — basically the positions are always relative to the symbol anchor. For symbol-placement: point, each glyph gets an “x” and “y” coordinate. For symbol-placement: line, we always lay out in a single line and essentially collapse to just “x” coordinates (at render time, we use the line geometry to calculate the actual position of each glyph).

Line Breaking

The shaping code is responsible for determining where to insert line breaks. The algorithm we use tries to find a set of line breaks that will make all lines of a label have relatively similar length. For details on the algorithm (and why this is important for CJK labels), see my blog post on line-breaking.
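Here is a minimal sketch of the "similar line lengths" idea, not the production algorithm: pick a target width (total width divided by the number of lines that maxWidth forces), then use dynamic programming over word boundaries to minimize the sum of squared differences from that target. Word widths and spacing are hypothetical inputs, and any extra rules the real implementation applies to specific break points are omitted.

```javascript
// Balanced line breaking over words. Returns the index of the first word on
// each line. Note: maxWidth only sets the line count; lines may exceed it.
function breakLines(wordWidths, spaceWidth, maxWidth) {
  const n = wordWidths.length;
  const total = wordWidths.reduce((a, b) => a + b, 0) + spaceWidth * (n - 1);
  const target = total / Math.ceil(total / maxWidth);
  // best[i]: minimal cost for words 0..i-1; prev[i]: start of the last line.
  const best = new Array(n + 1).fill(Infinity);
  const prev = new Array(n + 1).fill(0);
  best[0] = 0;
  for (let end = 1; end <= n; end++) {
    let width = -spaceWidth; // grows as we prepend words to the line
    for (let start = end - 1; start >= 0; start--) {
      width += wordWidths[start] + spaceWidth;
      const cost = best[start] + (width - target) ** 2;
      if (cost < best[end]) { best[end] = cost; prev[end] = start; }
    }
  }
  const starts = [];
  for (let i = n; i > 0; i = prev[i]) starts.unshift(prev[i]);
  return starts;
}

// Six equal words, room for four per line: greedy filling would give 4 + 2,
// balancing gives 3 + 3.
const lineStarts = breakLines([10, 10, 10, 10, 10, 10], 0, 40);
```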

BiDi and Arabic “Shaping”

The shaping code is also responsible for applying the Unicode Bidirectional algorithm to figure out how to interleave left-to-right and right-to-left text (this is intertwined with line breaking). It’s not, however, responsible for the thing we call “arabic shaping”, which is a codepoint substitution hack implemented in the earlier tile parsing stage. For more details, see my blog post on supporting Arabic text.

Vertical Text Layout

We have support for rotating CJK characters when they’re following a line that’s within 45 degrees of vertical. The way the implementation works is to run shaping twice: once in horizontal mode, and once in vertical mode (where CJK characters are rotated, but non-CJK characters aren’t). We upload both shapings to the GPU, and at render time we dynamically calculate the orientation of each line label (based on the current camera position) and use that to toggle which set of glyphs to hide/show.
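The render-time choice reduces to a simple test. This sketch assumes the input is a pair of projected endpoints (hypothetical screen-space coordinates) for the label's line, and applies the "within 45 degrees of vertical" rule stated above.

```javascript
// True when the projected line runs closer to vertical than horizontal,
// i.e. within 45 degrees of vertical, so the vertical shaping should show.
function useVerticalShaping(x0, y0, x1, y1) {
  return Math.abs(y1 - y0) > Math.abs(x1 - x0);
}
```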

Variable Text Anchors

We’ve just added the ability for symbols to automatically change their anchor position to find a place to “fit” on the map. If changing the anchor may also require the text justification to change, we generate an extra shaping for each possible justification and choose which set of glyphs to show at render time.

native

getShaping: shaping.cpp

js

shapeText: shaping.js

Generating Quads

The process of going from a shaping to GL buffers is pretty straightforward. It’s just a matter of taking each rectangular glyph from the shaping and turning it into two triangles (called a “quad”). We apply transforms like text rotation here, but essentially the way to think of it is just a translation between different in-memory representations of the same data.
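A sketch of that translation for one glyph: the glyph's rectangle (relative to the anchor) becomes four corners, optionally rotated around the anchor, plus an index list describing the two triangles. The field names are hypothetical, not the gl-js or native structs, and texture coordinates are omitted.

```javascript
// One shaped glyph -> four quad corners, rotated by `angle` radians
// around the symbol anchor (the origin of the shaping coordinates).
function glyphQuad(glyph, angle = 0) {
  const { x, y, width, height } = glyph;
  const corners = [
    [x, y], [x + width, y], [x, y + height], [x + width, y + height],
  ];
  const cos = Math.cos(angle);
  const sin = Math.sin(angle);
  return corners.map(([px, py]) => [px * cos - py * sin, px * sin + py * cos]);
}

// The two triangles share the diagonal between corners 1 and 2.
const QUAD_TRIANGLES = [0, 1, 2, 1, 2, 3];
```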

After the buffers are generated, background work is essentially done, and we transfer all the buffers for our tile to the foreground, so it can start rendering.

native

quads.cpp and SymbolLayout::addSymbol

js

quads.js and SymbolBucket#addSymbol

The Foreground

All of the work up to this point happened in the background: if it takes longer to run, it means tiles take longer to load. Background work has to be fast relative to the cost of loading a tile from the network (or disk). If a tile can parse in 50ms, that’s fine. On the foreground, anything that takes more than a few milliseconds will cause ugly, janky rendering.

Every 16ms, the render thread is responsible for drawing a frame with the contents of the currently loaded tiles. For symbols, this involves a few components:

- Collision detection (running every 300ms)

- Per-layer CPU work (mainly updating line label positions)

- Per-layer GPU draw calls (running the shaders)

We originally did collision detection and line label layout on the background (along with the GPU), but despite the strict limits on render-thread CPU time, we moved them to the foreground over the course of 2017. Why?

- Each symbol instance comes from a specific tile, but symbols render across tile boundaries and can collide with each other across tile boundaries. Doing collision detection properly required global collision detection, and the foreground is the one place that has all the data available.

- Text is way more legible laid out on the plane of the viewport, but background layout is necessarily done on the plane of the map, and the GPU has limited capability to re-do layout, since it has to handle each vertex in isolation from all the other vertices that make up a label.

The cumulative effect of the "pitched label quest" of 2017 was a dramatic improvement in the legibility of our maps in pitched views:

(Comparison images: Before, gl-js v0.37 with streets-v9; After, gl-js v0.42 with streets-v10.)

Basics of Collision Detection (aka “Placement”)

To understand how our collision detection works, dig into the Collision Detection article.

The basic idea is that we represent each symbol as a box (for point labels) or as a series of circles (for line labels). When we run collision detection, we start with our highest priority feature, add its geometry to a 2D spatial index, and then move on to the next feature. As we go through each feature, we only add features that don’t collide with any already-placed features.
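The greedy loop can be sketched like this. It is a simplification under stated assumptions: symbols are hypothetical axis-aligned boxes only (no circle chains for line labels), and a plain array with a linear scan stands in for the real 2D spatial index, so it is O(n²).

```javascript
// Greedy placement: highest priority first, keep a symbol only if its box
// misses every box already placed. Array.prototype.sort is stable, so
// equal priorities keep input order (deterministic results).
function placeSymbols(symbols) {
  const kept = [];
  const sorted = [...symbols].sort((a, b) => b.priority - a.priority);
  for (const s of sorted) {
    const collides = kept.some((p) =>
      s.x1 < p.x2 && s.x2 > p.x1 && s.y1 < p.y2 && s.y2 > p.y1);
    if (!collides) kept.push(s);
  }
  return kept.map((s) => s.id);
}

const shown = placeSymbols([
  { id: "a", priority: 1, x1: 0, y1: 0, x2: 10, y2: 10 },
  { id: "b", priority: 2, x1: 5, y1: 5, x2: 15, y2: 15 },
  { id: "c", priority: 0, x1: 20, y1: 0, x2: 30, y2: 10 },
]);
```

Here "b" wins its overlap with "a" on priority, and "c" is placed because it overlaps nothing.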

Fading

After collision detection finishes, we start fading in newly placed items and fading out newly collided items. We do this by uploading “opacity buffers” to the GPU for every symbol layer. For every symbol, we encode the current opacity and the “target” opacity (either 1 or 0). The shader then animates the fading by interpolating between “current” and “target” based on the global clock.
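The interpolation itself is simple; this CPU-side sketch shows the math for one symbol. The 300ms fade duration is an assumed parameter, and unlike the real pipeline (which derives the value in the shader from the global clock) this steps a single value directly.

```javascript
// Move an opacity toward its target (0 or 1) at a constant rate so that a
// full fade takes `fadeDuration` milliseconds.
function stepOpacity(current, target, elapsedMs, fadeDuration = 300) {
  const delta = elapsedMs / fadeDuration;
  return target > current
    ? Math.min(target, current + delta)
    : Math.max(target, current - delta);
}
```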

CrossTileSymbolIndex

We use a data structure called the CrossTileSymbolIndex to identify symbols that are “the same” between different tiles (“same” ~ “similar position and same text value”). This is important for smooth zooming without symbols flickering in and out — instead of having to re-run collision detection whenever we load a new tile in order to figure out what we can show, we just match the symbols in the new tile against previously shown symbols, and if we find a match we use the same opacity information for the new symbol (until the next collision detection runs).
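The matching rule ("similar position and same text value") can be sketched as a lookup keyed by text with a position tolerance. The class, the tolerance, and the world-pixel coordinates are illustrative assumptions, not how the real CrossTileSymbolIndex organizes its data across zoom levels.

```javascript
// Toy cross-tile index: a new symbol inherits state from a previously shown
// symbol with the same text whose anchor is within `tolerance` units.
class ToyCrossTileIndex {
  constructor(tolerance = 4) {
    this.tolerance = tolerance;
    this.byText = new Map();
  }
  add(symbol) {
    if (!this.byText.has(symbol.text)) this.byText.set(symbol.text, []);
    this.byText.get(symbol.text).push(symbol);
  }
  match(symbol) {
    const candidates = this.byText.get(symbol.text) || [];
    return candidates.find((c) =>
      Math.abs(c.x - symbol.x) <= this.tolerance &&
      Math.abs(c.y - symbol.y) <= this.tolerance) || null;
  }
}

const crossTile = new ToyCrossTileIndex();
crossTile.add({ text: "Main St", x: 100, y: 100, opacity: 1 });
// A symbol from a newly loaded tile reuses the old opacity if it matches.
const inherited = crossTile.match({ text: "Main St", x: 102, y: 99 });
```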

Pauseable Placement

JS has a feature that splits collision detection across multiple frames, with a ~2ms limit for each frame. This adds complexity to the code, and on the native side we got acceptable performance without this feature, so we never implemented it.

Symbol Querying

We have a long tradition of implementing new features and only realizing, when we think we’re finished, that we broke the queryRenderedFeatures functionality. Symbol querying is built on top of the CollisionIndex: we translate queries into the coordinates used by the collision index, get a list of symbols that intersect with the query coordinates, and then look up the data for the underlying features in the per-tile FeatureIndex.

native

placement.cpp and cross_tile_symbol_index.cpp

js

placement.js and cross_tile_symbol_index.js

Render-time layout for line labels

Labels on curved lines in a pitched view are tricky. We want the glyphs to have consistent spacing in the viewport plane, but we want the glyphs to follow lines that are laid out in the tile plane, and the relationship between tile coordinates and viewport coordinates changes on every frame.

We brute-force the problem. For every line label, we transfer not only its glyphs to the foreground, but also the geometry of the line that it’s laid out on. At render time, we start at the anchor point for the label, and figure out where the ends of that line segment end up in viewport space. If there’s enough space for the label, we lay it out along that projected line. Otherwise, we keep moving outward, one line segment at a time, until there’s enough room for the whole label, and for each glyph we calculate x/y coordinates based on where it fits on the projected lines. Then, we upload all of this layout data to the GPU before drawing.
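The outward walk can be sketched for a single glyph: starting from the anchor, consume projected segments one at a time until the glyph's offset fits. This assumes the polyline is already projected to viewport pixels and that the anchor sits on the segment from points[anchorSegment] to points[anchorSegment + 1]; the function and parameter names are hypothetical.

```javascript
// Position of a glyph `offset` pixels along the projected line from the
// anchor (negative = backward). Returns null if the line runs out.
function pointAlongLine(points, anchorSegment, anchorPoint, offset) {
  if (offset === 0) return anchorPoint;
  const dir = offset > 0 ? 1 : -1;
  let remaining = Math.abs(offset);
  let prev = anchorPoint;
  // Walking forward, the next vertex is the segment's end; backward, its start.
  let i = dir > 0 ? anchorSegment + 1 : anchorSegment;
  while (i >= 0 && i < points.length) {
    const next = points[i];
    const len = Math.hypot(next[0] - prev[0], next[1] - prev[1]);
    if (len >= remaining) {
      const t = remaining / len;
      return [prev[0] + (next[0] - prev[0]) * t, prev[1] + (next[1] - prev[1]) * t];
    }
    remaining -= len; // move outward one segment
    prev = next;
    i += dir;
  }
  return null; // not enough room: the real code would hide the label
}

const projectedLine = [[0, 0], [10, 0], [10, 10]];
const glyphPos = pointAlongLine(projectedLine, 0, [5, 0], 8); // turns the corner
```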

This is expensive! On dense city maps, it shows up as our biggest render-thread expense. But it also looks good.

We also use this render-time line projection logic to determine which “collision circles” should be used for a label (as you pitch the map, vertically oriented labels essentially get “longer”, so they need to use more collision circles). It’s a historical accident that we create these collision circles on the background and then dynamically choose which ones to use on the foreground — if we were starting fresh, we would just generate the collision circles on the foreground.

native

See reprojectLineLabels in render_symbol_layer.cpp

js

See symbolProjection.updateLineLabels in draw_symbol.js

Perspective Scaling

What’s up with this formula: 0.5 + 0.5 * camera_to_center / camera_to_anchor?

It’s a technique for making far-away text somewhat easier to read, and for keeping nearby text from taking up too much space. After a lot of prototyping and over-design, Nicki and I decided on a simple rule: “all text in pitched maps should scale at 50% of the rate of the features around it”. So if your label is over a lake in the distance that is drawn at 25% of the size it would be in the center of the map, your text should shrink only half as much: it is drawn at 62.5% (0.5 + 0.5 × 0.25) of the size it would be in the center of the map.

In the formula, “camera to center” is the distance (don’t worry about the units, although they’re kind of pixel-based) to the point in the center of the viewport. This is a function of the viewport height and the field of view. “camera to anchor” is the distance to the anchor point of a symbol, in the same units. In an un-pitched map, “camera to anchor” is the same as “camera to center” for every point (if you think about this, it’s not actually the same as if you were staring down at a paper map flat on the table!).
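The formula from the top of this section, written out. Features scale with camera_to_center / camera_to_anchor, so a lake drawn at 25% size has an anchor four times as far away as the center, which gives 0.5 + 0.5 × 0.25 = 0.625 for the text. The distances only need to share units.

```javascript
// Perspective ratio for viewport-aligned text: halfway between "no scaling"
// (1) and full perspective scaling (cameraToCenter / cameraToAnchor).
function perspectiveRatio(cameraToCenter, cameraToAnchor) {
  return 0.5 + 0.5 * (cameraToCenter / cameraToAnchor);
}

const farLabel = perspectiveRatio(100, 400);  // features at 25% -> text at 62.5%
const nearLabel = perspectiveRatio(100, 50);  // features at 200% -> text at 150%
```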

(Comparison images: Before, dense, hard-to-read labels in the distance; After, fewer but larger labels in the distance.)

Units, planes, and projections

Understanding or modifying text code requires a firm grasp of the units involved, and it’s easy to lose track of which you’re working with:

- point: the internal unit of glyph metrics and shaping

  - Converted to pixels in the shader by fontScale

- em: for us, an “em” is always 24 points.

  - When we generate SDFs, we always draw them with a 24 point font. We then scale the glyph based on the text-size (so a 16 point glyph for us is just 2/3 the size of the 24 point glyph, whereas the underlying font might actually use different glyphs at smaller sizes)

  - Commonly used for text-related style spec properties

  - https://en.wikipedia.org/wiki/Em_(typography)

- pixel: self-explanatory, right?

  - Except these are kind of “imaginary” pixels. Watch out for properties that are specified in pixels but are laid out in tile space. In that case we may transform them to something that’s not literally a pixel on screen

  - See pixelsToGLUnits and pixelsToTileUnits

- tile unit: a value between 0 and the tile EXTENT (normalized to 8192 regardless of the extent of the source tile)

  - This is the basic unit for almost everything that gets put in a GL buffer. The transform for a tile converts tile units into positions on screen.

  - Assuming a 512 pixel tile size and an EXTENT of 8192, each tile unit is 1/16 pixel when the tile is shown at its base zoom and unpitched. See pixelsToTileUnits for conversion logic at fractional zoom; in pitched maps, of course, there’s no direct linear conversion.

- gl coordinates/NDC: [-1, 1] coordinates based on the viewport

  - NDC or “normalized” form is just the gl-coord x, y, z divided by the “w” component.

  - u_extrude_scale is typically used in the shaders to convert into gl units
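The unitless relationships in the list above, as plain functions. This is a sketch modeled on what fontScale and pixelsToTileUnits do, not their real code; EXTENT = 8192, the 24-point em, and the 512-pixel tile size come from the list, and the pixel conversion only holds for unpitched maps.

```javascript
const EXTENT = 8192; // tile units per tile edge
const ONE_EM = 24;   // points per em

// Scale from glyph points to pixels for a given text-size.
const fontScale = (textSize) => textSize / ONE_EM;

// Pixels -> tile units for a tile of integer zoom `tileZoom` drawn at
// (possibly fractional) map zoom `zoom`. Unpitched maps only.
function pixelsToTileUnits(pixels, zoom, tileZoom, tileSize = 512) {
  return pixels * (EXTENT / (tileSize * Math.pow(2, zoom - tileZoom)));
}
```

At the tile's own zoom one pixel is 16 tile units; one zoom level in, the tile is drawn twice as large, so a pixel covers only 8.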

When you modify a point, you always want to use units that match the coordinate space that you’re currently working in. “Projections” transform points from one coordinate space to the next.

- Tile coordinate space

  - Use tile units

  - Different tiles have different coordinates, but they’re all in the “plane” of the map (that is, the potentially-pitched “surface”)

- Map pixel coordinate space

  - Use pixel units

  - Transformed from tile units to pixels, but still aligned to the map plane. May be rotated

- Viewport pixel coordinate space

  - Use pixel units

  - Aligned to the viewport (i.e. “flat” relative to the viewer)

- GL coordinate space

  - Use gl units

  - Aligned to the viewport

In the text rendering code, the labelPlaneMatrix takes you from tile units to the “label plane” (the plane on which your text is drawn), and then the glCoordMatrix takes you from the label plane to the final gl_Position output. The order of projection depends on what you’re drawing — the two most common cases are:

- Viewport-aligned line labels: use labelPlaneMatrix to project to viewport-pixel coordinate space on the CPU, do layout, pass an “identity” labelPlaneMatrix to the shader, then apply glCoordMatrix in the shader.

- Viewport-aligned point labels: pass a tile→viewport-pixel labelPlaneMatrix to the shader, which applies the projection, does pixel-based layout, then projects to GL coords.

For more details on the coordinate systems, see the comments at the header of: projection.js

For a more detailed walk-through of how we transform points, see Mapbox GL coordinate systems. Or, just to develop an intuition, play with this interactive demo.

How to love the symbol_sdf shader

Phew, we’re finally at the point where something gets drawn on the screen!

Vertex Shader

The vertex shader is responsible for figuring out where the outer edges of each glyph quad go on the screen. Its input is basically: an anchor, an offset, and a bunch of sizing/projection information.

There are a few steps:

- Figuring out the “size”

  - Works differently depending on whether the property is zoom- or feature-dependent

- Figuring out the “perspective ratio”

  - Based on projecting a_pos, which is the anchor for the symbol as a whole. Easy to confuse with a_projected_pos, which in the case of line labels can be the CPU-projected position of a single glyph (while in the case of point labels it’s the same as a_pos).

- Offsetting the vertex from the label-plane projected_pos, including rotation as necessary

- Calculating opacity to pass to the fragment shader

- Projecting to gl coordinates, passing texture coordinates to the fragment shader

Fragment Shader

The fragment shader is given a position within the glyph atlas texture, looks it up, and then converts it into a pixel value:

```glsl
alpha = smoothstep(buff - gamma_scaled, buff + gamma_scaled, dist);
```

👆 dist is the “signed distance” we pull out of the texture. buff is basically the boundary between “inside the glyph” and “outside the glyph” (dirty secret: we call them “signed distance field”, but we actually encode them as uint8s and treat 192-255 as “negative”). gamma_scaled defines the distance range we use to go from alpha 255 to alpha 0 (so we get smooth diagonal edges).
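When simulating the shader by hand, it helps to have the same math on the CPU. This sketch uses the standard GLSL definition of smoothstep and assumes dist is the texture sample normalized to [0, 1], with the glyph edge (buff) at 192/256 = 0.75 per the uint8 encoding described above; gamma_scaled is left as an input.

```javascript
// GLSL smoothstep: Hermite interpolation between two edges, clamped.
function smoothstep(edge0, edge1, x) {
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
  return t * t * (3 - 2 * t);
}

// CPU copy of the fragment shader's alpha for one SDF sample.
function glyphAlpha(dist, gammaScaled, buff = 192 / 256) {
  return smoothstep(buff - gammaScaled, buff + gammaScaled, dist);
}
```

Samples well inside the glyph give alpha 1, samples well outside give 0, and a sample exactly on the edge gives 0.5, with the width of the soft band set by gamma_scaled.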

The fragment shader also applies paint properties in a fairly obvious way.

Icons

The astute will note that symbol_icon has a nearly-identical vertex shader, while its fragment shader does lookup in an RGBA texture instead of an SDF. The wise will also know that GL supports uploading SDF icons, so sometimes icons are actually rendered with symbol_sdf.

Tada! 🎉

Aww… crap. When something doesn’t work in the shader, you’re lucky if the result is this 👆 legible. You can’t debug directly in the shader, so how can you tell what’s going wrong?

- Check your units!

- Pause right before the call to gl.drawElements, examine all the inputs, and try simulating the shader code by hand with pen and paper for one or two vertices. This is fun and doing it regularly will probably reduce your risk of getting dementia when you’re older.

- Use an OpenGL debugger (such as the WebGL Inspector extension in Chrome) to capture all the draw calls going into a frame. This is good for eyeballing things like “what’s in the texture I just uploaded”. You can also use it to look at the contents of the buffers being used in the draw call, although in my experience it’s easier to inspect them on the CPU side.

- Write debugging code in the shader: the tricky thing is getting creative with figuring out how to extract debug information by drawing it onscreen. The “overdraw inspector” is a simple but very useful example of this approach.

Mapbox GL Coordinate Systems

TL;DR: Unsure how a point on a map gets transformed from a WGS84 latitude/longitude into x and y coordinates of a pixel on your screen?

- Love matrix math, or willing to skim past a bunch of math? Read on…

- Just want to see some of the guts of Mapbox GL without any context? Check out the coordinate transformation demo I made.

For the last two months, I've been working on making labels easier to read on pitched maps, and as my first time really working with 3D graphics, it's been a crash course in trigonometry and linear algebra. The work brought back a surprising number of pleasant memories from high school trig class, and made me grateful for all the teachers (from Mr. Hatter twenty years ago to our local math teacher @anandthakker today) who have worked to prepare me for this job!

3D graphics are all about transforming points between different coordinate systems, and as I followed our code to figure out how our transformations worked, I kept getting tripped up because I didn't realize which coordinate system the code was using. In this devlog, I'm trying to answer the question I didn't know I needed to ask when I started: what coordinate systems do we use in Mapbox GL to turn a bunch of points defined by latitude and longitude into a bunch of points with pixel x and y coordinates on your screen?

If you’re working directly on Mapbox GL code, this is important stuff to know: otherwise, these implementation details are for the most part mercifully hidden.

I'll start where @lyzidiamond's awesome devlog on projections and coordinate systems left off: WGS84 latitude and longitude.

Where's the Washington Monument?

Let's follow how one point gets transformed through our different coordinate systems. The Washington Monument is located at approximately (longitude: -77.035915, latitude: 38.889814) in the WGS84 coordinate system. What happens if we ask Mapbox GL to draw a cat at just that point?

There are five coordinate systems we'll move through:

- WGS84

- World Coordinates

- Tile Coordinates

- GL Coordinates

- Screen Coordinates/NDC

Projecting WGS84 to Web Mercator/"World Coordinates"

As @lyzidiamond pointed out, computers love squares, so our first step is to take those spherical coordinates and turn them into coordinates on a rectangular 2D world (aka a "Pseudo-Mercator Projection"). Our particular projection imagines the world as a giant grid of pixels with the size:

worldSize = tileSize * number of tiles across one dimension

We use a tile size of 512 (roughly: this means a tile is 512 pixels square, although that would only be true at the base zoom level and without any pitch). I'll assume we're rendering tiles at zoom level 11 (which means there are 2^11 tile positions across each dimension). That gives us:

worldSize = 512 * 2^11 = 1048576

Then getting an x coordinate is easy: we just shift by 180˚ (because x=0 is at -180˚), divide by 360˚, and multiply by the world size:

x coord = (180˚ + longitude) / 360˚ * worldSize

= (180˚ + -77.035915˚) / 360˚ * 1048576

= 299,904

For the y coordinate, we apply the funky y stretch that is Web Mercator:

y = ln(tan(45˚ + latitude / 2))

= ln(tan(45˚ + 38.889814˚ / 2))

= 0.73781742861

y coord = (180˚ - y * (180 / π)) / 360˚ * worldSize

= (180˚ - 42.27382˚) / 360˚ * 1048576

= 401,156
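The same two formulas in code, with tileSize = 512 as above (π/4 + lat·π/360 is just 45˚ + latitude/2 in radians). At full precision this gives roughly (299904.6, 401156.6), and dividing by 512 gives about (585.751, 783.509), so treat the fourth decimal place of the z11 values quoted just below as approximate.

```javascript
// WGS84 longitude/latitude -> Web Mercator "world coordinates" in pixels.
function lngLatToWorld(lng, lat, zoom, tileSize = 512) {
  const worldSize = tileSize * Math.pow(2, zoom);
  const x = ((180 + lng) / 360) * worldSize;
  // The Web Mercator y stretch: ln(tan(45˚ + latitude / 2)).
  const yMerc = Math.log(Math.tan(Math.PI / 4 + (lat * Math.PI) / 360));
  const y = ((180 - yMerc * (180 / Math.PI)) / 360) * worldSize;
  return { x, y };
}

const monument = lngLatToWorld(-77.035915, 38.889814, 11);
// Zoom-independent form: divide by the tile size and remember z11.
const monumentTileSpace = { x: monument.x / 512, y: monument.y / 512, zoom: 11 };
```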

OK, so (x: 299904, y: 401156) may be a great internal representation, but it's awkward and you have to know what zoom level it applies to. We can represent the same world coordinate by storing the zoom level and dividing the x and y components by the tile size. Our Washington Monument coordinate then becomes: (585.7471, 783.5067, z11). One nice thing about storing coordinates this way is that it's really simple to transform them to different zoom levels.

Web Mercator -> Tile Coordinates

Now we're making progress, because we have almost exactly what we need to ask the server for a tile that contains this point: we just drop the sub-integer precision and ask for (585/783/11).

If we're drawing a vector tile, it will have geometry data for all the things on the map that we need to draw around our Washington Monument Cat. The Mapbox vector tile spec specifies that tiles should have a defined extent that they use internally for coordinates. For a tile with an extent of 1024, (0,0) would be the top left corner of the tile and (1024,1024) would be on the bottom right.

Whatever tile coordinates come in, Mapbox GL internally normalizes to an extent of 8192 — since our tiles are (kind of) 512 pixels wide, that means our tile coordinates have a precision of 512/8192 = 1/16th of a pixel. To represent our Washington Monument coordinates in tile coordinates, we just multiply the remainder by the extent, so:

(x: 585.7471, y: 783.5067, zoom: 11)

Becomes:

Tile: 585/783/11

Tile Coordinates: (.7471 * 8192, .5067 * 8192) = (x: 6120, y: 4151)
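That split (integer part picks the tile, fractional part times the extent gives the position inside it) as a short sketch; rounding to whole tile units is an assumption about how the result is stored.

```javascript
// Zoom-relative world coordinates (x / tileSize, y / tileSize) -> tile ID
// plus in-tile coordinates normalized to EXTENT.
function worldToTile(tx, ty, zoom, extent = 8192) {
  const col = Math.floor(tx);
  const row = Math.floor(ty);
  return {
    tile: `${col}/${row}/${zoom}`,
    x: Math.round((tx - col) * extent),
    y: Math.round((ty - row) * extent),
  };
}

const monumentInTile = worldToTile(585.7471, 783.5067, 11);
```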

Tile Coordinates -> 4D GL Coordinates

When Mapbox GL loads a map tile, it pulls together all the data for the tile and turns it into a representation the GPU can understand. In general, the representation we give to the GPU is specified in tile coordinates: the advantage here is that we only have to build the tile once, but then we can ask the GPU to quickly draw the same tile with different bearing, pitch, zoom, and pan parameters.

The code we have that runs on the GPU is called a "shader": it takes tile coordinate inputs and outputs four dimensional "GL Coordinates" in the form (x, y, z, w). Wait, four dimensions? Don't worry, it's just for doing math.

x and y are pretty straightforward left/right and up/down. z is only slightly trickier: it represents position along an axis directly orthogonal to your screen. w is the more mysterious component, but it's necessary for generating the "perspective" effect: you can think of it as a "scaling" parameter for the other components, or as a measure of how far away they are from the viewer. When we draw labels, it's very useful to know the w component of a point, because it gives us a measure of how large the items around us will be (a higher w value means the perspective projection will make everything around our point smaller when it ultimately displays on the screen).

Converting from tile coordinates to GL coordinates involves applying a lot of "transformations" to the coordinates: one to apply perspective, one to move the imaginary camera away from the map, one to center the map, one to rotate the map, one to pitch the map, etc. Each one of these transformations can be represented as a 4D matrix, and all of the matrices can be combined into one matrix that applies all the transformations in one mathematical operation. These transformations build on top of some pixel-based parameters (size of the viewport, idealized size of tile) so we sometimes talk about the resulting coordinates as "pixel" coordinates although it's a fairly indirect connection. You might also see this coordinate system referred to as "clip space".

Let's see what that looks like for a very specific view of our map. Note that each tile has its own projection matrix: before going into GL coordinates, we first have to go back from tile coordinates to world coordinates so that the points from different tiles all have the same frame of reference.

Zoom level: 11.6

Map Center: 38.891/-77.0822 (over Washington DC)

Bearing: -23.2 degrees

Pitch: 45 degrees

Viewport dimensions: 862 by 742 pixels

Tile: 585/783/11 (covering a portion of Washington DC)

Gives a transformation matrix of:

| input ↓ / output → | x | y | z | w |
|---|---|---|---|---|
| x | 0.224 | -0.079 | -0.026 | -0.026 |
| y | -0.096 | -0.184 | -0.062 | -0.061 |
| z | 0.000 | 0.108 | -0.036 | -0.036 |
| w | -503.244 | 1071.633 | 1469.955 | 1470.211 |

There's way too much to unpack there in one devlog, but if you want to look at all the individual transformations to get a feel for how they fit together to make a 3D projection, and how they change as you move around the map, check out this GL coordinate transformation demo I made.

To get from tile coordinates to GL coordinates, we just turn the tile coordinates into a 4D vector with a z-value of 0 (ignoring buildings for now, sorry @lbud!) and "neutral" w-value of 1. We then apply the transformation matrix to get our GL coordinate, so:

(x: 6120, y: 4151, z: 0, w: 1)

Becomes:

| | output x | output y | output z | output w |
|---|---|---|---|---|
| input x = 6120 | 0.224 * 6120 = 1370.88 | -0.079 * 6120 = -483.48 | -0.026 * 6120 = -159.12 | -0.026 * 6120 = -159.12 |
| input y = 4151 | -0.096 * 4151 = -398.496 | -0.184 * 4151 = -763.784 | -0.062 * 4151 = -257.362 | -0.061 * 4151 = -253.211 |
| input z = 0 | 0.000 * 0 = 0 | 0.108 * 0 = 0 | -0.036 * 0 = 0 | -0.036 * 0 = 0 |
| input w = 1 | -503.244 * 1 = -503.244 | 1071.633 * 1 = 1071.633 | 1469.955 * 1 = 1469.955 | 1470.211 * 1 = 1470.211 |
| Output (summed) | 469.14 | -175.631 | 1053.473 | 1057.88 |

😬 (yikes, the numbers don’t exactly match up with the machine output below! My excuse is that I did the math by hand at low precision…)

(x: 472.1721, y: -177.8471, z: 1052.9670, w: 1053.7176)
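To double-check the hand calculation, here is a small Java sketch that applies the rounded matrix values from the table to (6120, 4151, 0, 1). The class and method names are hypothetical; the layout follows the worked example, where each row holds one input component's contribution to the x, y, z, and w outputs. Because it uses the 3-decimal matrix entries, it reproduces the hand-computed sums rather than the full-precision machine output (for w: -159.12 - 253.211 + 1470.211 = 1057.88).

```java
import java.util.Locale;

// Sketch: tile coordinate -> GL coordinate for tile 585/783/11, using the
// rounded matrix entries from the table (not full machine precision).
public class TileToGl {

    // Rows = input components (x, y, z, w); columns = output components.
    static final double[][] MATRIX = {
        {0.224, -0.079, -0.026, -0.026},
        {-0.096, -0.184, -0.062, -0.061},
        {0.000, 0.108, -0.036, -0.036},
        {-503.244, 1071.633, 1469.955, 1470.211}
    };

    // Turn a 2D tile coordinate into (x, y, 0, 1) and transform it.
    static double[] tileToGl(double tileX, double tileY, double[][] m) {
        double[] in = {tileX, tileY, 0, 1}; // z = 0 (flat map), w = 1 (neutral)
        double[] out = new double[4];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                out[col] += in[row] * m[row][col];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        double[] gl = tileToGl(6120, 4151, MATRIX);
        // prints x=469.140 y=-175.631 z=1053.473 w=1057.880
        System.out.printf(Locale.ROOT, "x=%.3f y=%.3f z=%.3f w=%.3f%n", gl[0], gl[1], gl[2], gl[3]);
    }
}
```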

When you pan/rotate/tilt our map and it animates at a smooth 60 fps, what's happening is that we're changing the transformation matrix and then passing that modified matrix to the GPU, which does a massively parallel (and mind-bogglingly fast) application of that transformation matrix to all the points it needs to draw.

4D GL Coordinates -> 2D Pixels on your screen

Our shader code is done once it outputs GL coordinates, but OpenGL still does some more work to turn the coordinates into pixel locations on your screen.

First, it transforms to "Normalized Device Coordinates" by dividing the x, y, z components of the GL Coordinate by the w component. The resulting values look like:

x: -1 to 1 --> left to right of viewport

y: -1 to 1 --> bottom to top of viewport

z: -1 to 1 --> depth (not directly visible)

Any values outside the range [-1, 1] indicate points that are off the screen; they are clipped (roughly speaking, thrown away). Another way to think of w, then, is as "the bounds of what coordinates are visible at this distance".

In our example, the GL Coordinates:

(x: 472.1721, y: -177.8471, z: 1052.9670, w: 1053.7176)

Become NDC:

(472.1721 / 1053.72, -177.8471 / 1053.72, 1052.9670 / 1053.72)

-> (x: 0.4481, y: -0.1688, z: 0.9993)
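The divide by w and the visibility rule can be sketched in a few lines of plain Java (class and method names are hypothetical). `insideClipVolume` checks -w <= x, y, z <= w on the GL coordinate, which for a positive w is the same as asking whether each NDC component lands in [-1, 1]; points with a non-positive w (at or behind the camera) fail it. Real clipping also cuts triangles that straddle the boundary, which this single-point test does not model.

```java
import java.util.Locale;

// Sketch: GL (clip-space) coordinate -> Normalized Device Coordinates.
public class PerspectiveDivide {

    // Visible when -w <= x, y, z <= w (equivalent to NDC in [-1, 1] for w > 0).
    static boolean insideClipVolume(double[] gl) {
        double w = gl[3];
        for (int i = 0; i < 3; i++) {
            if (gl[i] < -w || gl[i] > w) {
                return false;
            }
        }
        return true;
    }

    // NDC: divide the x, y, z components by w.
    static double[] toNdc(double[] gl) {
        double w = gl[3];
        return new double[] {gl[0] / w, gl[1] / w, gl[2] / w};
    }

    public static void main(String[] args) {
        double[] gl = {472.1721, -177.8471, 1052.9670, 1053.7176};
        double[] ndc = toNdc(gl);
        // prints x=0.4481 y=-0.1688 z=0.9993 visible=true
        System.out.printf(Locale.ROOT, "x=%.4f y=%.4f z=%.4f visible=%b%n",
                ndc[0], ndc[1], ndc[2], insideClipVolume(gl));
    }
}
```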

The z-value of NDC doesn't affect the 2D pixel coordinates. Instead, it's used for combining multiple layers (for instance, a bridge drawn over a river). The z-value tells the GPU which points are "in front" of other points: for opaque layers only the frontmost layer will show, but for translucent layers the z-order can be used for blending. Actually, I don't really know what I'm talking about here: ask @lbud!

Finally, OpenGL translates NDC to pixel coordinates based on the size of the viewport:

In our case, the viewport is 862x742 pixels, so (measuring y down from the top of the viewport):

Pixel Coordinates: (NDC.x * width / 2 + width / 2, height / 2 - NDC.y * height / 2)

= (0.4481 * 431 + 431, 371 - (-0.1688 * 371))

= (x: 624, y: 434)
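The viewport mapping can be checked with a short sketch (the class and method names are hypothetical). It scales each NDC component by half the viewport size and offsets it by the viewport center, flipping y so that pixel row 0 is the top of the viewport, which reproduces (624, 434) for the example.

```java
// Sketch: NDC -> pixel coordinates for a width x height viewport,
// with (0, 0) at the top-left corner.
public class NdcToPixels {

    // NDC x in [-1, 1] maps to [0, width]; NDC y in [-1, 1] maps to [height, 0].
    static double[] toPixels(double ndcX, double ndcY, double width, double height) {
        double px = ndcX * width / 2 + width / 2;
        double py = height / 2 - ndcY * height / 2;
        return new double[] {px, py};
    }

    public static void main(String[] args) {
        double[] px = toPixels(0.4481, -0.1688, 862, 742);
        // prints x=624 y=434
        System.out.printf("x=%d y=%d%n", Math.round(px[0]), Math.round(px[1]));
    }
}
```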

🎉

- Mali Performance series

- Mali GPU Application Optimization Guide

- ARM/Mali Performance and Optimization Tutorials

- Adreno OpenGL ES Developer Guide

- Mozilla list of Mobile GPU optimization (older)

- Optimizations for Tile based deferred GPUs

- PowerVR Performance Recommendations

- PowerVR Presentations

- PowerVR Documentation

- Older PowerVR blog entries

- http://blog.imgtec.com/powervr/a-look-at-the-powervr-graphics-architecture-tile-based-rendering

- http://blog.imgtec.com/powervr/optimizing-graphics-applications-for-powervr-gpus

- http://blog.imgtec.com/powervr/graphics-cores-trying-compare-apples-apples

- http://blog.imgtec.com/powervr/understanding-opengl-es-multi-thread-multi-window-rendering

- http://blog.imgtec.com/powervr/powervr-gpu-opengl-es-graphics-mobile-for-ten-years-p1

- http://blog.imgtec.com/powervr/powervr-gpu-opengl-es-graphics-mobile-for-ten-years-p2

- http://blog.imgtec.com/powervr/how-to-improve-your-renderer-on-powervr-based-platforms

- http://blog.imgtec.com/powervr/micro-benchmark-your-render-on-powervr-series5-series5xt-and-series6-gpus

- http://blog.imgtec.com/powervr/powervr-graphics-we-render-funny

1. Get the plugin for Android Studio

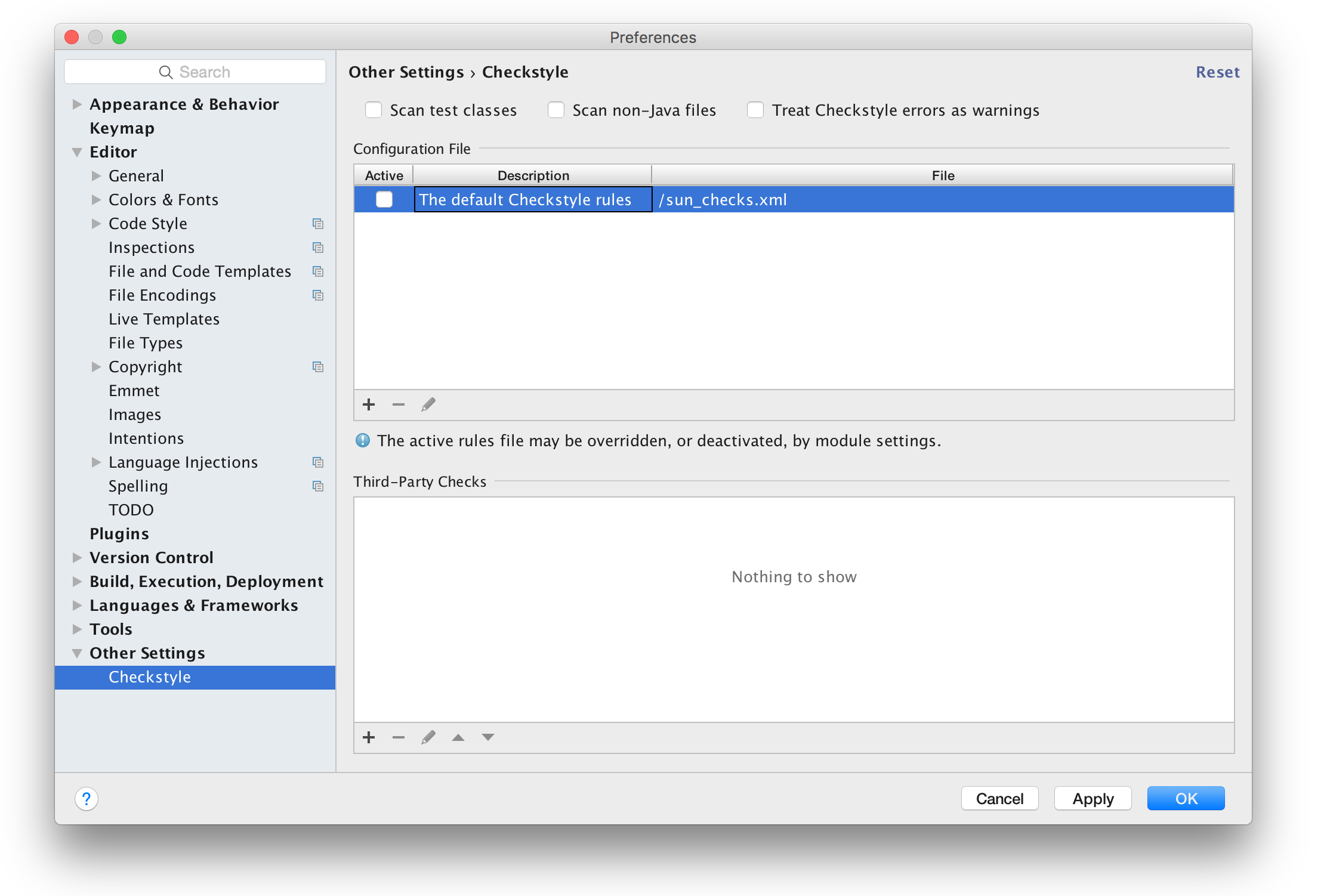

Adding this plugin provides real-time feedback against a given Checkstyle profile by way of an inspection.

You can install the plugin from within Android Studio by opening the Preferences, selecting Plugins in the sidebar, and clicking on Browse repositories…. Search for CheckStyle-IDEA, install and restart Android Studio.

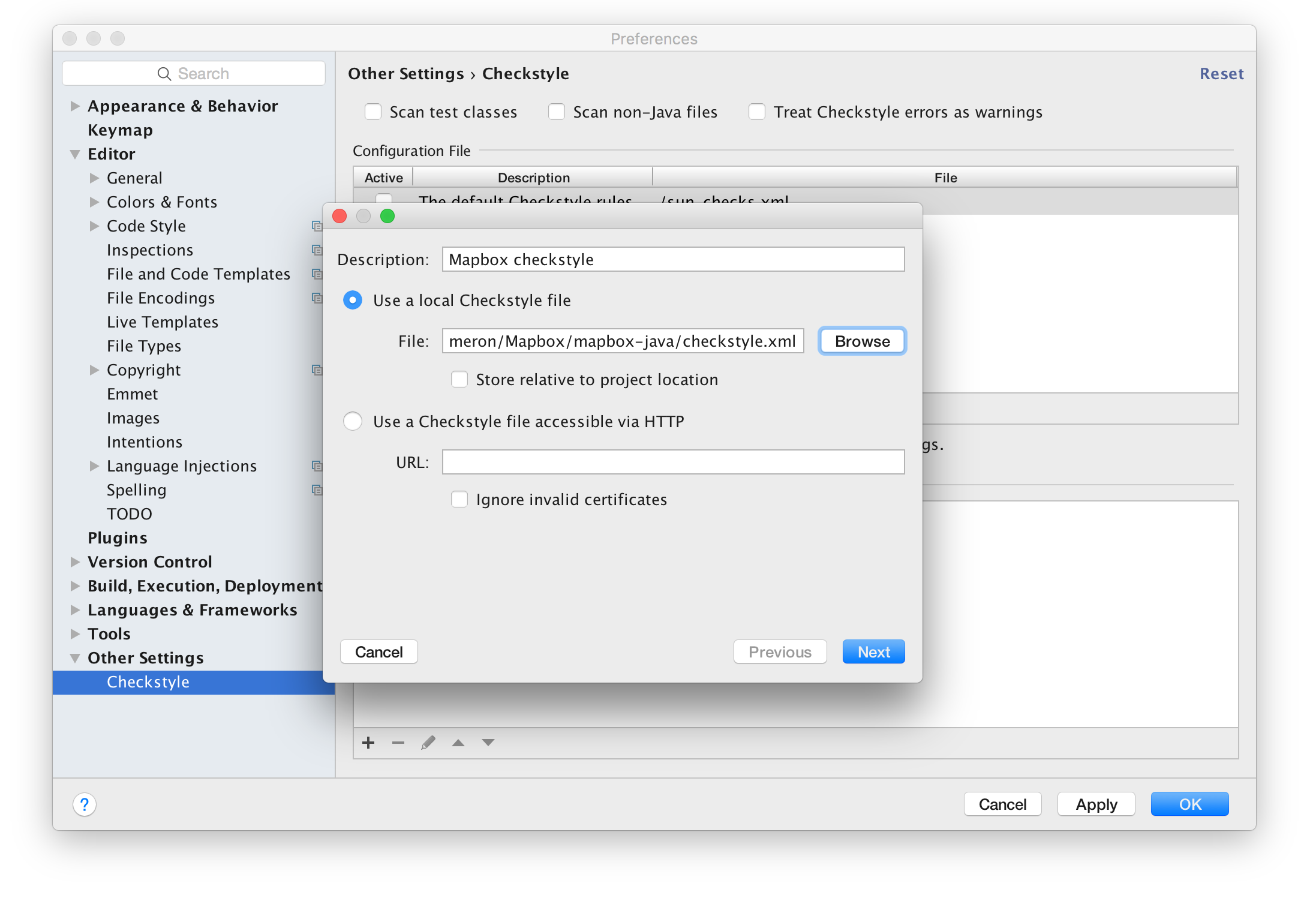

2. Add the project's Checkstyle configuration

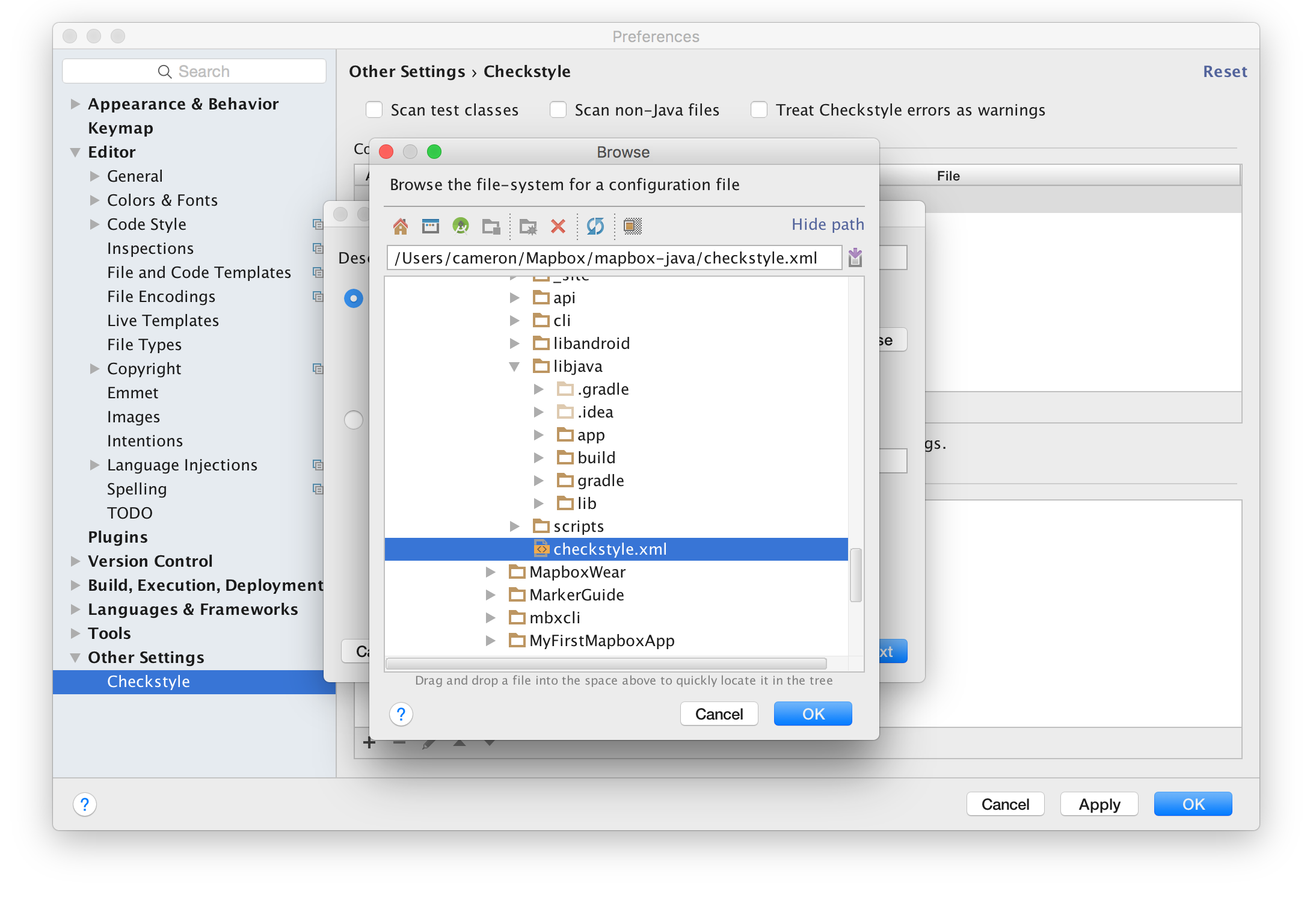

Open Preferences in Android Studio and navigate to Other Settings. Add the project's Checkstyle configuration by pressing the plus button:

and grabbing the checkstyle.xml file found in the cloned repo:

Give it a description, click Next, and then click Finish:

Make sure to check the new configuration to actually activate it in Android Studio. The last thing I do (this is optional) is check Treat Checkstyle errors as warnings.

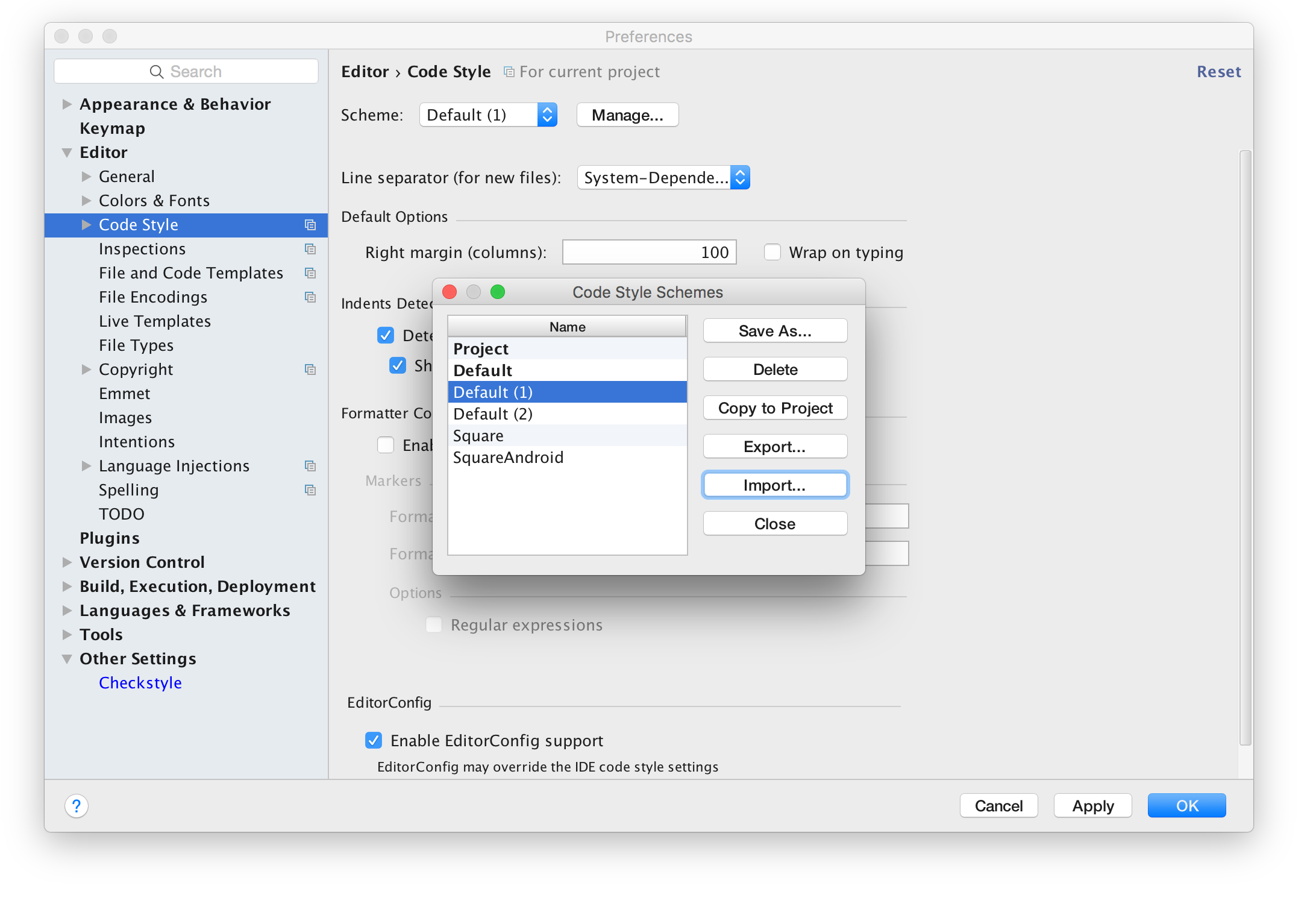

3. Change the project code style

To make formatting quick and easy, you'll most likely want to change the code style set in Android Studio. Open Preferences... in Android Studio if it isn't open already and select Editor -> Code Style. At the top, under Scheme, click the Manage button:

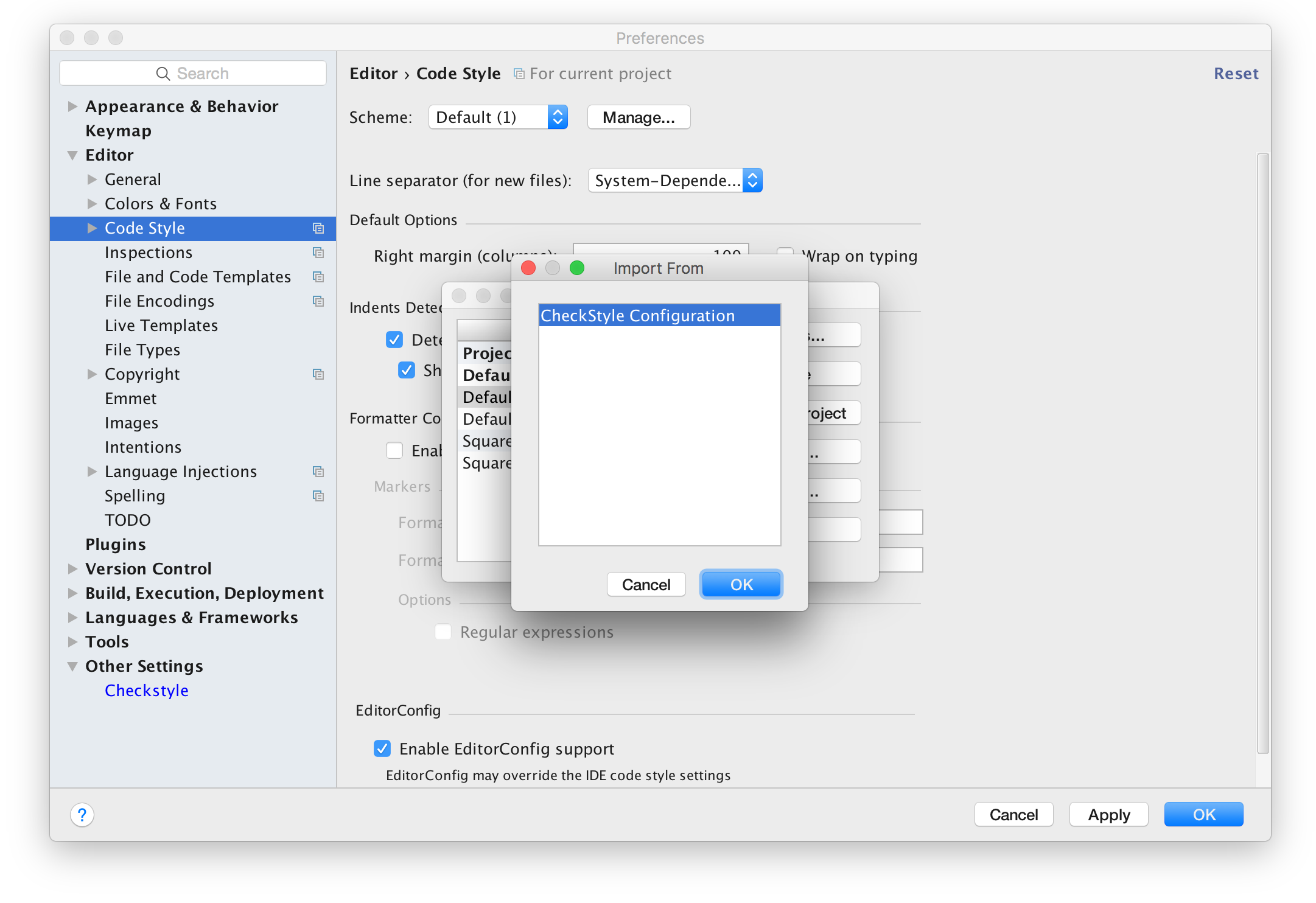

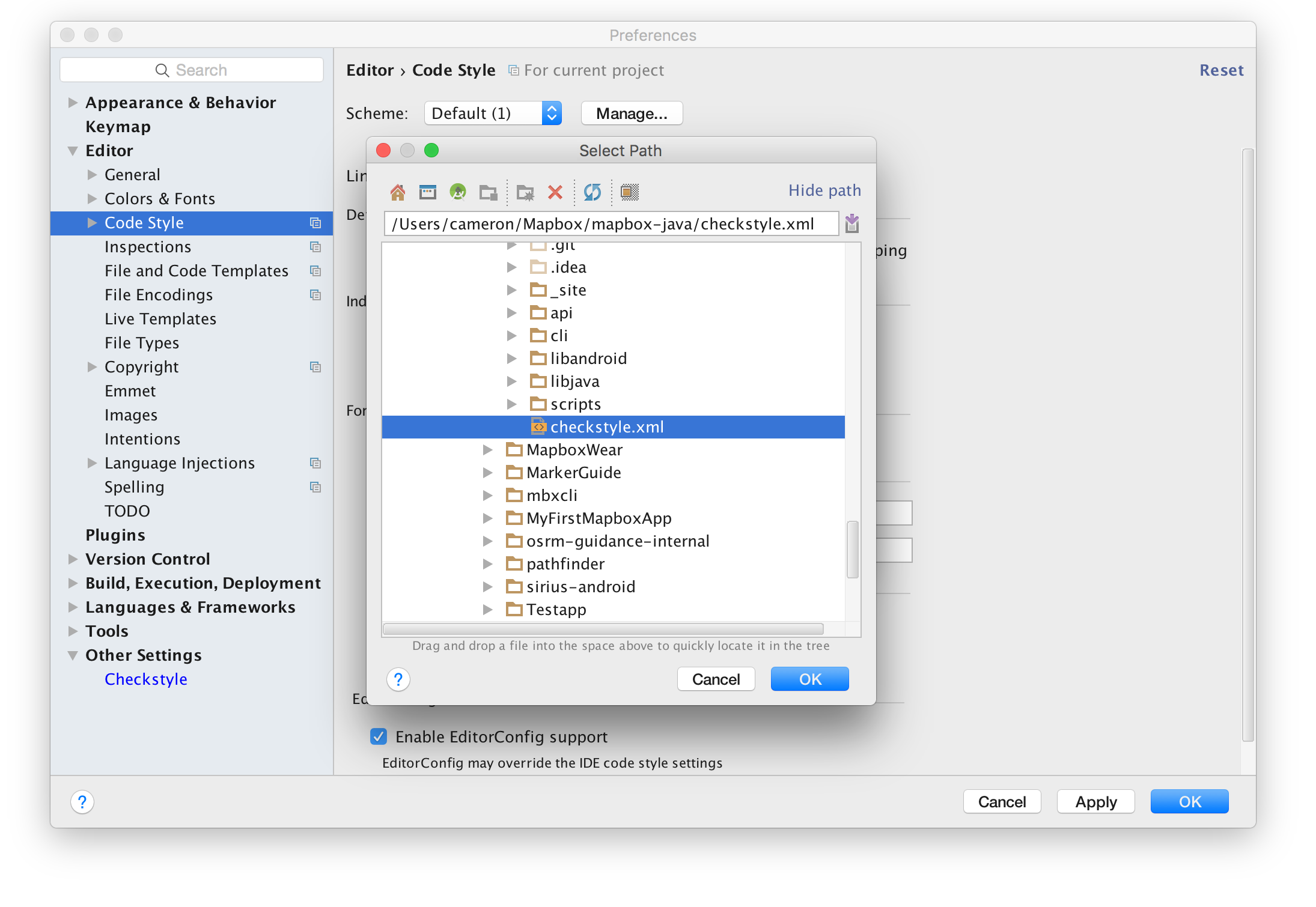

Choose either Project (to change the style for this project only) or Default, or create a new scheme. Click Import and add the checkstyle.xml file:

Ensure that the scheme you imported the checkstyle into is now selected in the dropdown menu, and click Apply. Now, whenever you are in a Java file, press [command][option][l] (on Mac) to fix the file's indentation and other formatting mistakes.

This document describes the changes needed to update a codebase that uses v5 of the SDK to version 6.0.0.

Java 8:

The 6.0.0 version of the Mapbox Maps SDK for Android introduces the use of Java 8. To fix any Java versioning issues, ensure that you are using Gradle version 3.0 or greater. Once you've done that, add the following compileOptions to the android section of your app-level build.gradle file:

```groovy
android {
    // ...

    compileOptions {
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }
}
```

This can also be done via your project settings (File > Project Structure > Your_Module > Source Compatibility / Target Compatibility). This is no longer required with Android Studio 3.1.0, as the new dex compiler D8 will be enabled by default.

Adding the compileOptions section above also solves the following error when updating the Maps SDK version:

```text
Static interface methods are only supported starting with Android N (--min-api 24): com.mapbox.geojson.Geometry com.mapbox.geojson.Geometry.fromJson(java.lang.String)

Message{kind=ERROR, text=Static interface methods are only supported starting with Android N (--min-api 24): com.mapbox.geojson.Geometry com.mapbox.geojson.Geometry.fromJson(java.lang.String), sources=[Unknown source file], tool name=Optional.of(D8)}
```

The static interface method used in com.mapbox.geojson.Geometry is compatible with any minSdkVersion (see Supported Java 8 Language Features and APIs).

Using expressions vs functions / filters

You don't need to worry about using expressions in your project if:

- you don't style any part of your map at runtime

- your project only uses professionally-designed Mapbox map styles (Streets, Outdoors, Satellite Streets, etc.)

- your project only uses a style that you've designed via Mapbox Studio

We've removed the old way of using Stop functions for runtime styling in the 6.0.0 release of the Maps SDK, so you must use expressions going forward.

If you don't want to rewrite your code with runtime styling expressions yet, you don't have to stop using Mapbox or stop building your project. Just use a version of the Mapbox Maps SDK for Android below 6.0.0, and you'll be fine until you decide to move to 6.0.0 or higher.

The 6.0.0 release changes the syntax used to apply data-driven styling to the map. Functions and filters have been replaced by expressions. Any layout property, paint property, or filter can now be specified as an expression. An expression defines a formula for computing the value of the property using the operators described below. The set of expression operators provided by Mapbox GL includes:

- Mathematical operators for performing arithmetic and other operations on numeric values

- Logical operators for manipulating boolean values and making conditional decisions

- String operators for manipulating strings

- Data operators, providing access to the properties of source features

- Camera operators, providing access to the parameters defining the current map view

Expressions tips & tricks:

- Stops should be listed in ascending order

  - If your stops have numeric inputs (such as zoom levels), the stops must be listed from the lowest input value to the highest. In the example below, the two stops that affect the radius of the CircleLayer's circles are placed in the correct order because zoom 12 is less than zoom 22:

```java
// circleLayer is your CircleLayer instance
circleLayer.setProperties(
    circleRadius(
        interpolate(
            exponential(1.75f),
            zoom(),
            stop(12, 2),
            stop(22, 180)
        )
    )
);
```

- Wrap Android int colors with the color expression

  - The Mapbox style specification exposes the rgb() and rgba() color expressions. To convert an Android int color to a color expression, you need to wrap it with color(int color). For example:

```java
stop(0, color(Color.parseColor("#F2F12D")))
```

- When you don't type your get() expressions explicitly, the surrounding expression will likely expect them to be a certain type implicitly, and it can fail at runtime: values that aren't the expected type fall back to the style-spec default. To alter this behavior, use toString(), toNumber(), toColor(), or toBoolean() to explicitly coerce them to the desired type.
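The ascending-order rule is easier to see with a small model of how an interpolate(exponential(...), zoom(), stop(...), ...) expression turns a zoom level into a value. The plain-Java sketch below is not the SDK: `ExponentialStops`, `requireAscending`, `factor`, and `evaluate` are hypothetical names, and the interpolation factor is assumed to follow the exponential-interpolation formula used by Mapbox GL's style-spec implementation (a base of 1 reduces to linear interpolation). Inputs below the first stop or above the last stop clamp to the end outputs, and out-of-order stop inputs are rejected because there would be no well-defined pair of neighboring stops to interpolate between.

```java
import java.util.Arrays;
import java.util.Locale;

// Hypothetical model of interpolate(exponential(base), zoom(), stop(...), ...).
public class ExponentialStops {

    // The "stops in ascending order" rule, applied to the stop inputs.
    static void requireAscending(double[] inputs) {
        for (int i = 1; i < inputs.length; i++) {
            if (inputs[i] <= inputs[i - 1]) {
                throw new IllegalArgumentException(
                        "Stop inputs must be strictly ascending: " + Arrays.toString(inputs));
            }
        }
    }

    // How far (0..1) the input lies between two stops; base 1 is linear.
    static double factor(double input, double base, double lower, double upper) {
        double difference = upper - lower;
        double progress = input - lower;
        if (difference == 0) return 0;
        if (base == 1) return progress / difference;
        return (Math.pow(base, progress) - 1) / (Math.pow(base, difference) - 1);
    }

    static double evaluate(double base, double input, double[] inputs, double[] outputs) {
        requireAscending(inputs);
        int last = inputs.length - 1;
        if (input <= inputs[0]) return outputs[0];       // clamp below the first stop
        if (input >= inputs[last]) return outputs[last]; // clamp above the last stop
        int i = 0;
        while (input >= inputs[i + 1]) i++;              // find the surrounding pair
        double t = factor(input, base, inputs[i], inputs[i + 1]);
        return outputs[i] + t * (outputs[i + 1] - outputs[i]);
    }

    public static void main(String[] args) {
        // Mirrors circleRadius(interpolate(exponential(1.75f), zoom(), stop(12, 2), stop(22, 180)))
        double[] zooms = {12, 22};
        double[] radii = {2, 180};
        for (double z : new double[] {10, 12, 17, 22, 24}) {
            System.out.printf(Locale.ROOT, "zoom %.0f -> radius %.2f%n", z, evaluate(1.75, z, zooms, radii));
        }
    }
}
```

Note that the outputs (2 and 180) could just as well decrease; only the stop inputs need to be ascending.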

Plugin support:

- Deprecation of setXListener

  - With the 6.0.0 release, listeners are set through addXListener methods so that multiple listeners can be notified, which plugins rely on. Passing null to an add method will not clear any listeners; use the equivalent removeXListener method instead.
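The add/remove contract can be sketched with a small, hypothetical registry class (`ListenerRegistry`, `addListener`, `removeListener`, and `dispatch` are illustrative names, not Maps SDK API). It shows the two behaviors described above: several listeners, such as the app's and a plugin's, can be registered for the same event, and adding null is ignored rather than clearing anything, so unregistering requires an explicit remove call.

```java
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical sketch of the addXListener / removeXListener pattern.
public class ListenerRegistry<T> {

    // Copy-on-write so a listener can safely remove itself during dispatch.
    private final CopyOnWriteArrayList<T> listeners = new CopyOnWriteArrayList<>();

    // Adding null is a no-op: it neither registers anything nor clears others.
    public void addListener(T listener) {
        if (listener != null) {
            listeners.addIfAbsent(listener);
        }
    }

    // The only way to unregister is to remove that specific listener.
    public void removeListener(T listener) {
        listeners.remove(listener);
    }

    public int size() {
        return listeners.size();
    }

    // Every registered listener (app code and plugins alike) is notified.
    public void dispatch(Consumer<T> event) {
        for (T listener : listeners) {
            event.accept(listener);
        }
    }

    public static void main(String[] args) {
        ListenerRegistry<Runnable> onMapClick = new ListenerRegistry<>();
        Runnable app = () -> System.out.println("app listener");
        Runnable plugin = () -> System.out.println("plugin listener");
        onMapClick.addListener(app);
        onMapClick.addListener(plugin);
        onMapClick.addListener(null);        // ignored: does not clear anything
        onMapClick.dispatch(Runnable::run);  // both listeners run
        onMapClick.removeListener(app);      // explicit removal instead
        onMapClick.dispatch(Runnable::run);  // only the plugin listener runs
    }
}
```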

Location layer vs LocationView/tracking settings:

With the 6.0.0 release, we are removing the deprecated LocationView and related tracking modes from the Maps SDK. These were deprecated in favor of the Location Layer Plugin in the plugins repository. The plugin directly renders the user’s location in GL instead of relying on a synchronized Android SDK View. The 0.5.0 version of the Location Layer Plugin is compatible with the 6.0.0 release of the Maps SDK.

LatLngBounds:

- The latitude values should be within the range -90 to 90 when a LatLngBounds object is created.

- LatLngBounds.Builder will create bounds with the shortest possible span.

```java
// The following bounds cross the antimeridian (the 180th meridian)
LatLngBounds latLngBounds = new LatLngBounds.Builder()
    .include(new LatLng(10, -170))
    .include(new LatLng(-10, 170))
    .build();
// latLngBounds.getSpan() will be 20, 20

// The same could be done with
latLngBounds = LatLngBounds.from(10, -170, -10, 170);
```

Note that if you need bounds built from the same points but spanning the other way around the earth (forming much wider bounds), you can use:

```java
LatLngBounds latLngBounds = LatLngBounds.from(10, 170, -10, -170);
// latLngBounds.getSpan() will be 20, 340
```

- The order of parameters for LatLngBounds.union() has changed. The parameter order for the union() method has been made consistent with other methods, that is: North, East, South, West.
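The longitude spans reported by getSpan() in the LatLngBounds examples above come from simple longitude arithmetic. Here is a minimal sketch with hypothetical helper names (not the SDK implementation): `spanEastward` measures the span going east from the west edge to the east edge, wrapping across the antimeridian when needed, and `shortestSpan` picks the shorter way around the globe between two longitudes, which is the choice LatLngBounds.Builder is described as making.

```java
// Hypothetical sketch of the longitude arithmetic behind LatLngBounds spans.
public class LongitudeSpan {

    // Span covered going east from `west` to `east`, in [0, 360).
    // west = 170, east = -170 crosses the antimeridian and covers 20 degrees.
    static double spanEastward(double west, double east) {
        double span = east - west;
        return span < 0 ? span + 360 : span;
    }

    // Of the two ways around the globe between two longitudes, the shorter one.
    static double shortestSpan(double lonA, double lonB) {
        double direct = Math.abs(lonA - lonB);
        return Math.min(direct, 360 - direct);
    }

    public static void main(String[] args) {
        // Like LatLngBounds.from(10, -170, -10, 170): east = -170, west = 170
        System.out.println(spanEastward(170, -170)); // 20.0
        // Like LatLngBounds.from(10, 170, -10, -170): east = 170, west = -170
        System.out.println(spanEastward(-170, 170)); // 340.0
        // Builder including longitudes -170 and 170
        System.out.println(shortestSpan(-170, 170)); // 20.0
    }
}
```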

GeoJSON changes:

- GeoJSON objects are immutable

  - All setCoordinates() methods are deprecated

- Geometry instantiation methods

The fromCoordinates() methods on classes that implement Geometry were renamed to fromLngLats(). The method name for the Point class is fromLngLat().

The Point, MultiPoint, LineString, MultiLineString, Polygon, and MultiPolygon classes can no longer be instantiated from an array of double. A List<Point> (or nested lists of them) should be used instead.

Pre-6.0.0 way:

```java
// release 5.x.x:
Polygon domTower = Polygon.fromLngLats(new double[][][] {
    new double[][] {
        new double[] {5.12112557888031, 52.09071040847704},
        new double[] {5.121227502822875, 52.09053901776669},
        new double[] {5.121484994888306, 52.090601641371805},
        new double[] {5.1213884353637695, 52.090766439912635},
        new double[] {5.12112557888031, 52.09071040847704}
    }
});
```

6.0.0+ way:

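```java
import com.mapbox.geojson.Point;
import com.mapbox.geojson.Polygon;
import java.util.Arrays;
import java.util.List;

// release 6.0.0
// The outer list holds the polygon's rings; each ring is a closed list of points.
List<List<Point>> lngLats = Arrays.asList(
    Arrays.asList(
        Point.fromLngLat(5.12112557888031, 52.09071040847704),
        Point.fromLngLat(5.121227502822875, 52.09053901776669),
        Point.fromLngLat(5.121484994888306, 52.090601641371805),
        Point.fromLngLat(5.1213884353637695, 52.090766439912635),
        Point.fromLngLat(5.12112557888031, 52.09071040847704)
    )
);

Polygon domTower = Polygon.fromLngLats(lngLats);
```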
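If a project stores many polygons in the 5.x double[][][] layout, a small helper can build the nested lists that Polygon.fromLngLats() expects instead of rewriting every literal by hand. This is a hypothetical sketch (`LegacyPolygonConverter` and `toRings` are not SDK names). To keep it runnable without the SDK, the point factory is a parameter; with the SDK you would pass `Point::fromLngLat` and hand the result to `Polygon.fromLngLats(...)`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

// Hypothetical migration helper: 5.x-style double[][][] -> List<List<P>>.
public class LegacyPolygonConverter {

    static <P> List<List<P>> toRings(double[][][] legacy, BiFunction<Double, Double, P> pointFactory) {
        List<List<P>> rings = new ArrayList<>();
        for (double[][] ring : legacy) {
            List<P> points = new ArrayList<>();
            for (double[] lngLat : ring) {
                // Each position is {longitude, latitude}, the same order as fromLngLat.
                points.add(pointFactory.apply(lngLat[0], lngLat[1]));
            }
            rings.add(points);
        }
        return rings;
    }

    public static void main(String[] args) {
        double[][][] legacy = {{
            {5.12112557888031, 52.09071040847704},
            {5.121227502822875, 52.09053901776669},
            {5.121484994888306, 52.090601641371805},
            {5.1213884353637695, 52.090766439912635},
            {5.12112557888031, 52.09071040847704}
        }};
        // Stand-in factory so the sketch runs without the SDK; with the SDK:
        // Polygon.fromLngLats(toRings(legacy, Point::fromLngLat))
        List<List<String>> rings = toRings(legacy, (lng, lat) -> lng + "," + lat);
        System.out.println(rings);
    }
}
```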

- Changes to getter methods’ names:

Feature and FeatureCollection getter methods don’t start with the word get any more. For example:

  - Feature.getId() → Feature.id()

  - Feature.getProperties() → Feature.properties()

  - FeatureCollection.getFeatures() → FeatureCollection.features(), etc.

The